Datastream Technologies: Processing Real-Time Data


Datastream technologies process data in real time, changing the way we analyze and react to information. They are reshaping industries by enabling businesses to make data-driven decisions as events occur, respond to changing market trends, and optimize operations with greater agility.

At its core, datastream processing involves continuously ingesting, processing, and analyzing data as it arrives, enabling organizations to gain valuable insights from a constant flow of information. This approach stands in contrast to traditional batch processing methods, where data is collected over time and analyzed in large batches, often leading to delays and hindering real-time decision-making.

Introduction to Datastream Technologies

Datastream technologies are revolutionizing how we handle and process data in real-time. These technologies are designed to process continuous, high-volume data streams, allowing for immediate insights and actions. This is in contrast to traditional batch processing, which involves collecting data over time and processing it in large chunks.

Key Characteristics of Datastream Processing

Datastream processing is characterized by its unique features, making it suitable for handling real-time data:

- Continuous Data Ingestion: Datastream technologies continuously ingest data from various sources, such as sensors, social media feeds, and financial markets. This enables real-time analysis and decision-making.

- Low Latency: Datastream processing emphasizes low latency, meaning that data is processed and analyzed quickly. This is crucial for applications requiring immediate responses, such as fraud detection and anomaly detection.

- Scalability: Datastream technologies are designed to scale horizontally, handling massive volumes of data efficiently. They can adapt to changing data volumes and processing demands.

- Fault Tolerance: Datastream processing systems are designed to be resilient and fault-tolerant. They can continue processing data even if components fail, ensuring data integrity and availability.

Real-World Applications of Datastream Technologies

Datastream technologies have numerous applications across various industries:

- Fraud Detection: Financial institutions use datastream technologies to detect fraudulent transactions in real-time by analyzing patterns and anomalies in transaction data.

- Real-Time Analytics: E-commerce companies leverage datastream technologies to provide personalized recommendations and optimize pricing based on real-time customer behavior and market trends.

- IoT Data Processing: Datastream technologies are essential for processing data from connected devices in the Internet of Things (IoT). They enable real-time monitoring, analysis, and control of IoT devices.

- Social Media Monitoring: Social media platforms use datastream technologies to monitor public sentiment, identify trending topics, and analyze user behavior in real-time.

- Cybersecurity: Datastream technologies are crucial for detecting and responding to cyberattacks in real-time. They analyze network traffic, log files, and other data sources to identify suspicious activities.

Types of Datastream Technologies

Datastream technologies can be categorized based on their processing approach, real-time capabilities, and application areas. Understanding these different types helps in selecting the most appropriate technology for specific use cases.

Real-Time vs. Batch Processing

Real-time and batch processing are two fundamental approaches to processing data.

- Real-time processing handles data as it arrives, providing immediate insights and responses. This approach is crucial for applications requiring low latency, such as fraud detection, anomaly detection, and real-time analytics.

- Batch processing, on the other hand, aggregates data over a period and processes it in batches, offering a more cost-effective solution for large-scale data analysis, reporting, and historical data exploration.
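To make the contrast concrete, here is a minimal Python sketch that computes the same average both ways. The batch version can only answer once the whole dataset has been collected, while the streaming version emits an updated result as each event arrives. The function names and sample readings are illustrative assumptions, not part of any framework.

```python
from typing import Iterable, Iterator, List


def batch_average(values: List[float]) -> float:
    """Batch style: the full dataset must be collected before any result exists."""
    return sum(values) / len(values)


def streaming_average(values: Iterable[float]) -> Iterator[float]:
    """Streaming style: keep a running count and total, emit a result per event."""
    count = 0
    total = 0.0
    for value in values:
        count += 1
        total += value
        yield total / count  # an up-to-date answer is available immediately


if __name__ == "__main__":
    readings = [10.0, 20.0, 30.0, 40.0]
    print(list(streaming_average(readings)))  # [10.0, 15.0, 20.0, 25.0]
    print(batch_average(readings))            # 25.0
```

Both end at the same value; the difference is that the streaming version never needs to hold the full dataset and has a usable answer after every event.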

Popular Datastream Processing Frameworks

There are numerous datastream processing frameworks available, each with its strengths and weaknesses.

- Apache Kafka: Kafka is a distributed streaming platform widely used for building real-time data pipelines. It excels in high-throughput message ingestion, reliable data delivery, and fault tolerance. It is commonly used for event streaming, message queuing, and real-time data integration.

- Apache Flink: Flink is a powerful open-source framework for real-time data processing. It offers a unified platform for both batch and stream processing, enabling efficient data analysis and machine learning tasks. Flink is known for its high performance, scalability, and state management capabilities.

- Apache Spark Streaming: Spark Streaming is a micro-batch processing framework built on top of Apache Spark. It provides a flexible and scalable platform for real-time data analysis and processing. Spark Streaming is often used for real-time dashboards, ETL pipelines, and machine learning applications.

- Amazon Kinesis: Kinesis is a fully managed streaming service offered by Amazon Web Services. It provides a robust platform for real-time data ingestion, processing, and analysis. Kinesis is well-suited for applications requiring low latency, high scalability, and data durability.
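Kafka organizes a topic as a set of append-only partitions: its default partitioner hashes a record's key to pick a partition, so records with the same key stay in order, and each consumer tracks its own position (offset) in each partition. The toy in-memory model below illustrates only that idea. It is not the Kafka client API; the class names are hypothetical and `zlib.crc32` stands in for Kafka's real hash function.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, str]  # (key, value)


class ToyPartitionedLog:
    """Hypothetical in-memory topic made of N append-only partitions."""

    def __init__(self, num_partitions: int) -> None:
        self.partitions: List[List[Record]] = [[] for _ in range(num_partitions)]

    def append(self, key: str, value: str) -> Tuple[int, int]:
        # Same key -> same partition, which preserves per-key ordering.
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)


class ToyConsumer:
    """Each consumer keeps its own offset per partition; reading deletes nothing."""

    def __init__(self, log: ToyPartitionedLog) -> None:
        self.log = log
        self.offsets: Dict[int, int] = defaultdict(int)

    def poll(self, partition: int, max_records: int = 10) -> List[Record]:
        start = self.offsets[partition]
        records = self.log.partitions[partition][start:start + max_records]
        self.offsets[partition] += len(records)  # advance past what was read
        return records


if __name__ == "__main__":
    log = ToyPartitionedLog(num_partitions=3)
    for i in range(4):
        log.append("user-42", f"click-{i}")
    partition, _ = log.append("user-42", "checkout")
    consumer = ToyConsumer(log)
    print(consumer.poll(partition))  # all five "user-42" records, in append order
```

Because reads only move a per-consumer offset, a second consumer can replay the same partition from the beginning, which is what makes this log model useful for reprocessing and recovery.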

Advantages and Disadvantages

Each processing approach offers distinct advantages and disadvantages that influence its suitability for specific use cases.

- Real-time Processing:

  - Advantages: Provides immediate insights, enables low-latency applications, and facilitates real-time decision-making.

  - Disadvantages: Can be resource-intensive, requires specialized infrastructure, and may add complexity to development.

- Batch Processing:

  - Advantages: Is cost-effective for large datasets, suits historical data analysis, and simplifies data processing tasks.

  - Disadvantages: Offers limited real-time capability, delays insights, and may be unsuitable for time-sensitive applications.

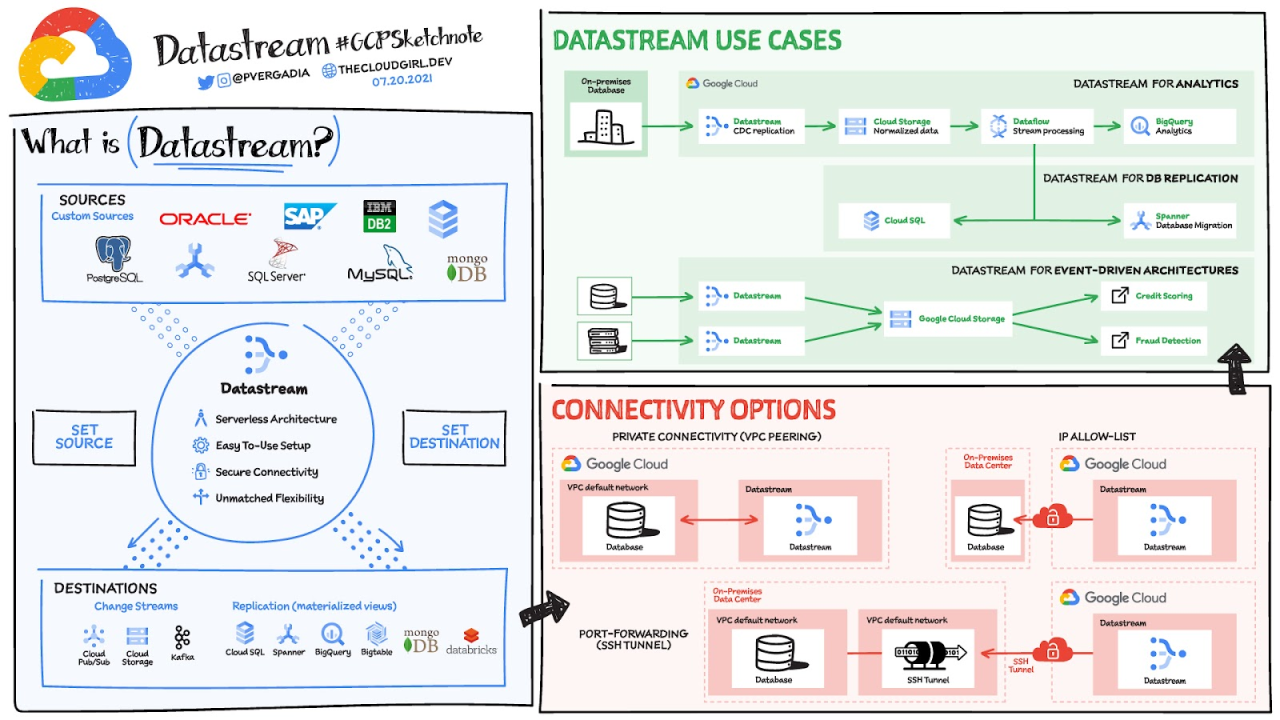

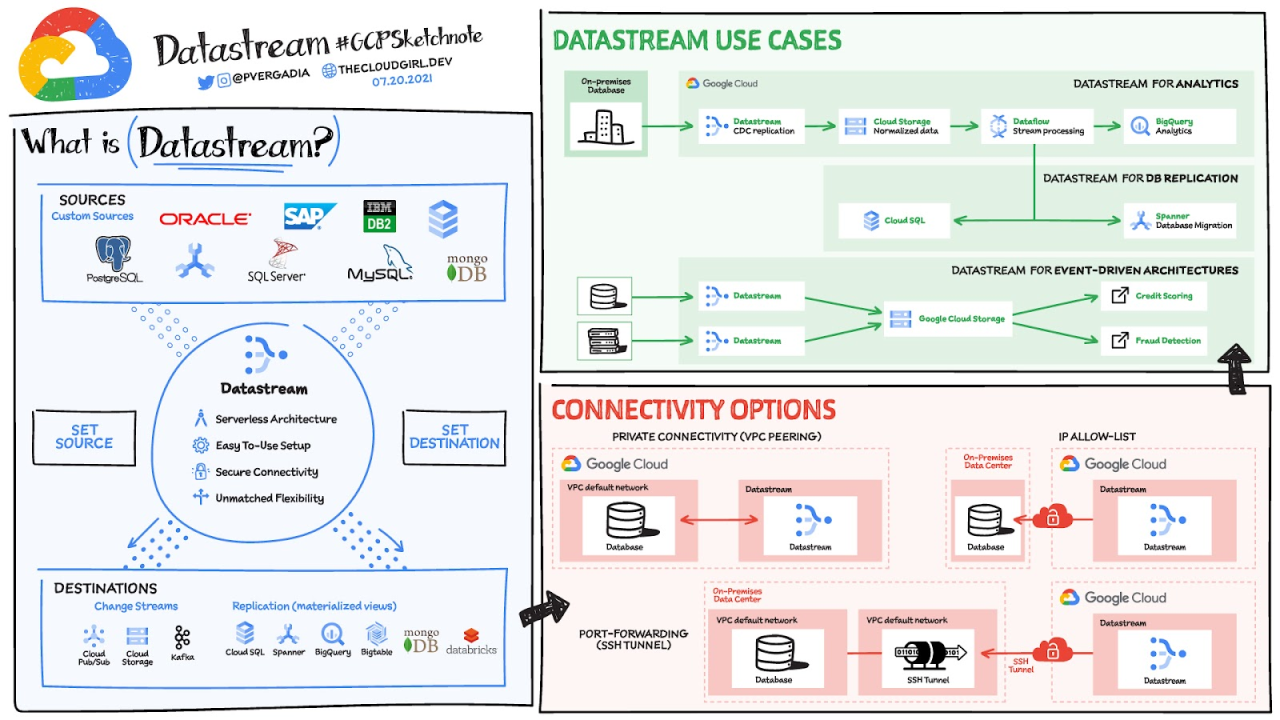

Datastream Architecture

Datastream processing systems are designed to handle continuous streams of data, requiring a specific architecture that enables efficient and real-time analysis. The architecture is designed to accommodate the unique characteristics of data streams, such as high volume, velocity, and variety.

This section delves into the typical architecture of a datastream processing system, exploring the essential components involved in datastream processing and providing a simplified illustration of the architecture.

Components of Datastream Processing

The components of a datastream processing system work together to capture, process, and deliver insights from real-time data.

- Data Sources: Data sources generate the streams of data that are processed by the system. These sources can be diverse, ranging from sensors and social media platforms to financial trading systems and IoT devices.

- Data Ingestion: The data ingestion layer is responsible for capturing data from various sources and delivering it to the processing engine. This layer handles tasks such as data validation, transformation, and buffering.

- Data Processing Engine: The heart of the system, the processing engine performs computations on the incoming data streams. It uses various techniques, including windowing, aggregation, and filtering, to extract meaningful insights.

- Data Storage: Processed data is stored in a persistent data store for historical analysis, reporting, and other downstream applications. This layer can utilize different storage technologies, including relational databases, NoSQL databases, and data lakes.

- Data Output: The data output layer delivers processed data to consumers, such as dashboards, applications, and other systems. This layer can utilize various output mechanisms, including APIs, message queues, and real-time visualization tools.

Datastream Processing Architecture Diagram

A simple datastream processing architecture can be visualized as follows:

Data Sources --> Data Ingestion --> Data Processing Engine --> Data Storage --> Data Output
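The same flow can be sketched as a chain of Python generators, with stages wired in the order of the diagram. This is a single-process illustration under assumed names (`source`, `ingest`, `process`, the `temp_c` field, the validation range and alert threshold, and a plain list standing in for storage); production systems typically run each stage as a separate, distributed service.

```python
from typing import Any, Dict, Iterable, Iterator, List

Event = Dict[str, Any]


def source() -> Iterator[Event]:
    """Data source: a hypothetical temperature sensor."""
    for reading in [21.5, -999.0, 22.0, 35.5]:  # -999.0 simulates a faulty reading
        yield {"temp_c": reading}


def ingest(events: Iterable[Event]) -> Iterator[Event]:
    """Data ingestion: drop records that fail validation."""
    for event in events:
        if -50.0 <= event["temp_c"] <= 150.0:  # assumed plausible range
            yield event


def process(events: Iterable[Event]) -> Iterator[Event]:
    """Processing engine: derive an alert flag from each reading."""
    for event in events:
        yield {**event, "alert": event["temp_c"] > 30.0}  # assumed alert threshold


def run_pipeline(storage: List[Event]) -> List[Event]:
    """Run the stages in diagram order and return what the output layer delivers."""
    delivered: List[Event] = []
    for event in process(ingest(source())):
        storage.append(event)        # data storage: keep every processed event
        if event["alert"]:
            delivered.append(event)  # data output: forward only alerts to consumers
    return delivered


if __name__ == "__main__":
    store: List[Event] = []
    print(run_pipeline(store))  # [{'temp_c': 35.5, 'alert': True}]
    print(len(store))           # 3
```

Because generators pull one event at a time, each reading flows through every stage before the next one is read, which mirrors the continuous, per-event nature of a stream pipeline.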

Datastream Processing Techniques

Datastream processing techniques are essential for extracting meaningful insights from the continuous flow of data. These techniques allow us to analyze and manipulate data in real-time, enabling us to make informed decisions based on the latest information.

Windowing

Windowing is a technique that divides the continuous data stream into smaller, manageable chunks called windows. This allows for efficient processing and analysis of data within a specific timeframe.

Types of Windows

Windowing techniques can be categorized based on the way they define the data chunks:

- Tumbling Windows: These windows have a fixed size and are non-overlapping. For example, a 1-minute tumbling window would process data in 1-minute intervals, starting from the beginning of each minute.

- Sliding Windows: These windows have a fixed size but overlap whenever the step (slide) is shorter than the window size. For example, a 5-second sliding window with a 2-second step covers 5 seconds of data and advances by 2 seconds each time, so a single event can fall into up to three windows.

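- Session Windows: These windows group data by periods of activity rather than by a fixed duration: a session stays open while events keep arriving and closes after a specified gap of inactivity. For example, a user session window would include all data points generated during a user's interaction with a website or application, ending once the user has been idle for longer than the gap.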
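The three window types can be sketched as small Python functions. This is a minimal illustration assuming event-time timestamps in seconds, half-open `[start, end)` windows aligned to multiples of the window size or step, and a session `gap` parameter; the function names are hypothetical, not a particular framework's API.

```python
from typing import List, Tuple

Window = Tuple[float, float]


def tumbling_window(ts: float, size: float) -> Window:
    """Return the single [start, end) tumbling window that contains ts."""
    start = (ts // size) * size
    return (start, start + size)


def sliding_windows(ts: float, size: float, step: float) -> List[Window]:
    """Return every [start, end) sliding window (starts at multiples of step) containing ts."""
    windows = []
    start = (ts // step) * step  # latest window start at or before ts
    while start > ts - size:     # this window still covers ts
        windows.append((start, start + size))
        start -= step
    return sorted(windows)


def session_windows(timestamps: List[float], gap: float) -> List[Window]:
    """Group timestamps into sessions; a gap longer than `gap` starts a new session.
    Each session is reported as (first event time, last event time)."""
    sessions: List[Window] = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][1] <= gap:
            sessions[-1] = (sessions[-1][0], ts)  # extend the current session
        else:
            sessions.append((ts, ts))             # inactivity gap: open a new session
    return sessions


if __name__ == "__main__":
    print(tumbling_window(65, 60))                           # (60, 120)
    print(sliding_windows(7, size=5, step=2))                # [(4, 9), (6, 11)]
    print(session_windows([1, 2, 3, 10, 11, 30], gap=5))     # [(1, 3), (10, 11), (30, 30)]
```

Note that tumbling and sliding windows are determined by the timestamp alone, while session boundaries depend on the gaps between events, so they can only be known as data arrives.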

Real-World Applications

Windowing techniques are widely used in various applications, including:

- Real-time Analytics: Windowing enables the analysis of data within specific timeframes, allowing for the identification of trends and patterns in real-time.

- Fraud Detection: Windowing can be used to detect suspicious activity by analyzing transactions within a specific time window.

- Network Monitoring: Windowing allows for the monitoring of network traffic patterns within defined timeframes, helping to identify anomalies and potential issues.
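As an example of windowing in fraud detection, the sketch below applies a common "velocity" rule: flag a card that makes too many transactions within a sliding time window. The threshold, window length, and `VelocityCheck` class are hypothetical, and the code assumes each card's transactions arrive in timestamp order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityCheck:
    """Flag a card with more than `max_txns` transactions in the last `window_s` seconds."""

    def __init__(self, max_txns: int, window_s: float) -> None:
        self.max_txns = max_txns
        self.window_s = window_s
        self.recent: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, card_id: str, ts: float) -> bool:
        """Record one transaction; return True if the card now looks suspicious."""
        times = self.recent[card_id]
        times.append(ts)
        # Evict timestamps that have slid out of the (ts - window_s, ts] window.
        while times and times[0] <= ts - self.window_s:
            times.popleft()
        return len(times) > self.max_txns


if __name__ == "__main__":
    check = VelocityCheck(max_txns=3, window_s=60)
    flags = [check.observe("card-1", t) for t in (0, 10, 20, 30, 95)]
    print(flags)  # [False, False, False, True, False]
```

Keeping only the timestamps inside the window bounds memory per card, which matters when the stream is unbounded.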

Aggregation

Aggregation involves combining data points from a stream into a single value or summary statistic. This helps to simplify and condense the data, making it easier to analyze and understand.

Types of Aggregations

Common aggregation techniques include:

- Sum: Adding up all the values in a window.

- Average: Calculating the mean value of data points in a window.

- Count: Counting the number of data points in a window.

- Min/Max: Finding the minimum or maximum value within a window.
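These aggregations can all be maintained incrementally, one event at a time, without storing the raw events. The sketch below keeps a running sum, count, min, and max per tumbling window and derives the average from sum and count; the class and function names and the sample data are illustrative assumptions.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass
class WindowAggregate:
    """Running sum, count, min and max for one window, updated per event."""
    total: float = 0.0
    count: int = 0
    minimum: float = math.inf
    maximum: float = -math.inf

    def add(self, value: float) -> None:
        self.total += value
        self.count += 1
        self.minimum = min(self.minimum, value)
        self.maximum = max(self.maximum, value)

    @property
    def average(self) -> float:
        # Derived from sum and count, so the raw events are never needed.
        return self.total / self.count if self.count else math.nan


def aggregate_tumbling(events: Iterable[Tuple[float, float]],
                       size: float) -> Dict[float, WindowAggregate]:
    """Assign each (timestamp, value) event to its tumbling window and update that window."""
    windows: Dict[float, WindowAggregate] = defaultdict(WindowAggregate)
    for ts, value in events:
        windows[(ts // size) * size].add(value)
    return dict(windows)


if __name__ == "__main__":
    sales = [(0, 4.0), (30, 6.0), (61, 10.0)]  # hypothetical (second, amount) events
    for start, agg in sorted(aggregate_tumbling(sales, 60).items()):
        print(start, agg.total, agg.count, agg.average, agg.minimum, agg.maximum)
```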

Real-World Applications

Aggregation techniques are used in various real-world scenarios, including:

- Sales Analysis: Aggregating sales data by time period can provide insights into sales trends and customer behavior.

- Traffic Monitoring: Aggregating traffic data can help to identify peak hours and traffic patterns.

- Sensor Data Analysis: Aggregating sensor data can provide insights into environmental conditions and equipment performance.

Filtering

Filtering is a technique that removes unwanted data points from a stream based on specific criteria. This helps to focus analysis on relevant data and improve efficiency.

Types of Filters

Common filtering techniques include:

- Value-based Filtering: Filtering data based on specific values, such as selecting only data points above a certain threshold.

- Time-based Filtering: Filtering data based on time criteria, such as selecting only data points within a specific time window.

- Pattern-based Filtering: Filtering data based on specific patterns or sequences, such as identifying anomalies or outliers.
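The three filter types can be sketched as lazy Python generators that chain together on an unbounded stream. Readings are assumed to be `(timestamp, value)` pairs; the "pattern" used here, a jump larger than `max_jump` from the previous reading, is one simple illustrative choice among many.

```python
from typing import Iterable, Iterator, Optional, Tuple

Reading = Tuple[float, float]  # (timestamp, value)


def value_filter(stream: Iterable[Reading], threshold: float) -> Iterator[Reading]:
    """Value-based: keep readings whose value exceeds the threshold."""
    return (r for r in stream if r[1] > threshold)


def time_filter(stream: Iterable[Reading], start: float, end: float) -> Iterator[Reading]:
    """Time-based: keep readings with start <= timestamp < end."""
    return (r for r in stream if start <= r[0] < end)


def jump_filter(stream: Iterable[Reading], max_jump: float) -> Iterator[Reading]:
    """Pattern-based: keep readings that differ from the previous one by more than max_jump."""
    previous: Optional[Reading] = None
    for r in stream:
        if previous is not None and abs(r[1] - previous[1]) > max_jump:
            yield r
        previous = r


if __name__ == "__main__":
    data = [(0, 1.0), (1, 1.2), (2, 9.0), (3, 1.1), (4, 5.0)]
    # Filters are generators, so each reading passes through the chain one at a time.
    print(list(jump_filter(time_filter(data, 0, 3), max_jump=3.0)))  # [(2, 9.0)]
```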

Real-World Applications

Filtering techniques are widely used in various applications, including:

- Spam Detection: Filtering email messages based on keywords or patterns to identify and block spam.

- Network Intrusion Detection: Filtering network traffic based on suspicious patterns to identify and block potential attacks.

- Data Cleaning: Filtering out erroneous or incomplete data points to improve data quality and accuracy.

Datastream Applications

Datastream technologies are widely used in various industries, revolutionizing how organizations process and analyze data in real-time. These technologies enable businesses to gain valuable insights from streaming data, leading to improved decision-making, optimized operations, and enhanced customer experiences.

Datastream Applications in E-commerce

Datastream technologies play a vital role in optimizing e-commerce operations. They are used to analyze customer behavior, predict demand, and personalize shopping experiences.

- Real-time Recommendations: Datastream technologies can analyze customer browsing history, purchase patterns, and other real-time data to provide personalized product recommendations. This helps increase sales by suggesting relevant products that customers are likely to be interested in.

- Fraud Detection: E-commerce companies use datastream technologies to detect fraudulent transactions in real-time. By analyzing data like purchase history, IP addresses, and payment information, they can identify suspicious activities and prevent financial losses.

- Inventory Management: Datastream technologies help optimize inventory levels by analyzing real-time sales data, demand forecasts, and supply chain information. This enables companies to avoid stockouts and overstocking, reducing costs and improving customer satisfaction.

Datastream Applications in Finance

Datastream technologies are essential in the financial industry for real-time risk management, fraud detection, and algorithmic trading.

- Real-time Risk Management: Financial institutions use datastream technologies to monitor market data, financial news, and trading activities in real-time. This helps them identify and mitigate risks quickly, preventing significant financial losses.

- Algorithmic Trading: Datastream technologies are used to develop and execute automated trading strategies. By analyzing market data and historical trends, algorithms can identify profitable trading opportunities and execute trades automatically, often with higher speed and accuracy than human traders.

- Fraud Detection: Financial institutions use datastream technologies to detect fraudulent transactions in real-time. By analyzing data like transaction history, account activity, and IP addresses, they can identify suspicious patterns and prevent financial crimes.

Datastream Applications in Healthcare

Datastream technologies are transforming healthcare by enabling real-time patient monitoring, disease prediction, and personalized treatment plans.

- Real-time Patient Monitoring: Datastream technologies can monitor vital signs, medical devices, and patient data in real-time. This allows healthcare professionals to identify potential health issues early and intervene quickly, improving patient outcomes.

- Disease Prediction: Datastream technologies can analyze patient data, medical records, and environmental factors to predict the likelihood of developing certain diseases. This enables early intervention and preventive measures, improving public health outcomes.

- Personalized Treatment Plans: Datastream technologies can analyze patient data to develop personalized treatment plans based on individual needs and preferences. This can improve treatment effectiveness and reduce side effects.
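A common building block of real-time patient monitoring is an alert that fires only when a vital sign stays out of range for several consecutive readings, which suppresses one-off sensor noise. The sketch below assumes `(timestamp, value)` readings; the limits and the `consecutive` count are illustrative numbers, not clinical thresholds.

```python
from typing import Iterable, Iterator, Tuple


def sustained_alerts(readings: Iterable[Tuple[float, float]], low: float, high: float,
                     consecutive: int) -> Iterator[float]:
    """Yield a timestamp once a vital sign has stayed outside [low, high] for
    `consecutive` readings in a row; emits one alert per excursion."""
    run = 0
    for ts, value in readings:
        if value < low or value > high:
            run += 1
            if run == consecutive:  # fire exactly once when the run reaches the limit
                yield ts
        else:
            run = 0  # back in range: reset the run


if __name__ == "__main__":
    # Hypothetical (second, heart-rate) stream.
    heart_rate = [(0, 80), (1, 130), (2, 135), (3, 140), (4, 138), (5, 90), (6, 131)]
    print(list(sustained_alerts(heart_rate, low=50, high=120, consecutive=3)))  # [3]
```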

Datastream Applications in Manufacturing

Datastream technologies are used in manufacturing to optimize production processes, improve quality control, and enhance predictive maintenance.

- Predictive Maintenance: Datastream technologies can analyze sensor data from machines and equipment to predict potential failures. This allows for proactive maintenance, reducing downtime and improving operational efficiency.

- Quality Control: Datastream technologies can monitor production processes in real-time and identify defects or deviations from quality standards. This helps ensure product quality and reduces waste.

- Production Optimization: Datastream technologies can analyze data from production lines, supply chain, and customer demand to optimize production schedules and resource allocation. This improves efficiency and reduces costs.
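The text does not name a specific method for predictive maintenance or quality control. One standard statistical process control technique that suits streams is the EWMA (exponentially weighted moving average) control chart, which is sensitive to slow drift such as gradually rising vibration. The sketch below swaps in that technique; the baseline readings, `alpha`, and `k` are hypothetical values, and the limits are the chart's steady-state limits.

```python
import math
import statistics
from typing import Iterable, Iterator, List, Tuple


def fit_baseline(healthy: List[float]) -> Tuple[float, float]:
    """Estimate the in-control mean and standard deviation from known-good readings."""
    return statistics.mean(healthy), statistics.stdev(healthy)


def ewma_alarms(readings: Iterable[float], mean: float, sd: float,
                alpha: float = 0.2, k: float = 3.0) -> Iterator[int]:
    """Yield the index of every reading at which the smoothed value
    z_t = alpha * x_t + (1 - alpha) * z_{t-1} leaves the steady-state limits
    mean +/- k * sd * sqrt(alpha / (2 - alpha))."""
    half_width = k * sd * math.sqrt(alpha / (2 - alpha))
    z = mean  # start the smoothed value at the in-control mean
    for i, x in enumerate(readings):
        z = alpha * x + (1 - alpha) * z
        if abs(z - mean) > half_width:
            yield i


if __name__ == "__main__":
    mean, sd = fit_baseline([1.0, 1.1, 0.9, 1.0, 1.05, 0.95])  # hypothetical vibration levels
    live = [1.0, 1.02, 0.98, 1.2, 1.3, 1.4]  # slow upward drift begins at index 3
    print(list(ewma_alarms(live, mean, sd)))  # [4, 5]
```

The one-reading delay before the first alarm is the usual trade-off of smoothing: the chart reacts slightly later to a jump but raises far fewer false alarms on noisy sensor data.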

Challenges in Datastream Technologies

Datastream technologies, while powerful, present several challenges that require careful consideration and appropriate solutions. These challenges can arise from various factors, including the sheer volume and velocity of data, the need for real-time processing, and the complexities of distributed systems.

Handling High-Volume Data

High-volume data streams are a common characteristic of datastream technologies. The sheer volume of data can overwhelm traditional processing methods, leading to performance bottlenecks and delays.

- Scalability: Traditional databases and data processing systems often struggle to handle the massive amount of data generated by data streams. This can lead to performance degradation and even system failures.

- Storage: Storing large volumes of data streams can be a significant challenge, requiring specialized storage solutions that can handle high write rates and low latency.

- Cost: The cost of storing and processing large volumes of data can be substantial, especially for organizations with limited resources.

To overcome these challenges, organizations can adopt scalable, distributed storage such as NoSQL databases and cloud-based storage services, and apply data compression to reduce storage footprint and cost.

Real-Time Processing

Datastream technologies often require real-time processing to extract insights and make decisions quickly.

- Latency: Processing delays can have significant consequences, especially in applications where real-time insights are crucial. For example, in fraud detection systems, delays in processing transactions can lead to fraudulent activities going undetected.

- Concurrency: Handling multiple data streams concurrently can be challenging, requiring efficient scheduling and resource allocation to ensure timely processing.

- Data Integrity: Maintaining data integrity in real-time processing is critical, as any errors can lead to inaccurate results and incorrect decisions.

To address these challenges, organizations can implement techniques like parallel processing, distributed computing, and in-memory databases.
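A common way to process streams in parallel without breaking per-key ordering is to partition events by key, so each worker owns a disjoint set of keys and needs no shared locks. The sketch below assumes `(key, value)` events and uses Python's `ThreadPoolExecutor`; for CPU-bound work, Python threads are limited by the GIL and `ProcessPoolExecutor` is the usual substitute. The function names and the `crc32` partitioning are illustrative choices.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Event = Tuple[str, float]  # (key, value)


def partition(events: List[Event], n: int) -> List[List[Event]]:
    """Route events by key hash so all events for one key land in one partition, in order."""
    parts: List[List[Event]] = [[] for _ in range(n)]
    for key, value in events:
        parts[zlib.crc32(key.encode()) % n].append((key, value))
    return parts


def process_partition(events: List[Event]) -> Dict[str, float]:
    """Per-partition work: a running total per key, with no cross-partition state."""
    totals: Dict[str, float] = {}
    for key, value in events:
        totals[key] = totals.get(key, 0.0) + value
    return totals


def parallel_totals(events: List[Event], workers: int = 4) -> Dict[str, float]:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(process_partition, partition(events, workers)))
    merged: Dict[str, float] = {}
    for partial in results:
        merged.update(partial)  # keys never overlap across partitions
    return merged


if __name__ == "__main__":
    stream = [("a", 1.0), ("b", 2.0), ("a", 3.0), ("c", 4.0)]
    print(parallel_totals(stream))
```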

Architectural Challenges

Designing and implementing a robust datastream architecture is crucial for handling the complexities of data stream processing.

- Fault Tolerance: Datastream systems need to be fault-tolerant to handle failures in individual nodes or components. This can be achieved through techniques like replication, redundancy, and distributed consensus algorithms.

- Data Consistency: Maintaining data consistency across distributed systems can be challenging, especially when dealing with high volumes of data. This requires careful consideration of data synchronization mechanisms and consistency models.

- Security: Data streams often contain sensitive information that needs to be protected from unauthorized access and manipulation. This requires implementing strong security measures, including data encryption, access control, and intrusion detection.

Organizations can address these challenges by adopting microservices architectures, using containerization technologies, and implementing robust security protocols.
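Fault tolerance in stream processors is often built on checkpointing: the processor periodically saves its read position (offset) and its state together, and after a failure it restores the last checkpoint and replays the source from that offset. The sketch below is a simplified single-process illustration of that idea; `CheckpointStore`, `SimulatedCrash`, and the word-count job are hypothetical, and a real system would write checkpoints to durable, replicated storage and read from a replayable log.

```python
import copy
from typing import Dict, List, Optional, Tuple

State = Dict[str, int]


class CheckpointStore:
    """Hypothetical stand-in for durable storage."""

    def __init__(self) -> None:
        self.latest: Tuple[int, State] = (0, {})  # (next offset to read, state snapshot)

    def save(self, offset: int, state: State) -> None:
        self.latest = (offset, copy.deepcopy(state))

    def load(self) -> Tuple[int, State]:
        offset, state = self.latest
        return offset, copy.deepcopy(state)


class SimulatedCrash(Exception):
    """Raised to mimic a worker failing mid-stream."""


def count_words(log: List[str], store: CheckpointStore, every: int = 2,
                crash_at: Optional[int] = None) -> State:
    """Count words from a replayable log, checkpointing offset and state together."""
    offset, counts = store.load()  # resume from the last completed checkpoint
    while offset < len(log):
        if offset == crash_at:
            raise SimulatedCrash(f"worker failed before record {offset}")
        word = log[offset]
        counts[word] = counts.get(word, 0) + 1
        offset += 1
        if offset % every == 0:
            store.save(offset, counts)  # offset and state go into one snapshot
    return counts


if __name__ == "__main__":
    log = ["a", "b", "a", "c", "a"]
    store = CheckpointStore()
    try:
        count_words(log, store, crash_at=3)
    except SimulatedCrash:
        print("crashed; last checkpoint:", store.latest)  # (2, {'a': 1, 'b': 1})
    print(count_words(log, store))  # {'a': 3, 'b': 1, 'c': 1}
```

The record at offset 2 is read twice (once before the crash, once during replay), but because the uncheckpointed state is discarded, the recovered counts match a failure-free run.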

Choosing Processing Techniques

Selecting the appropriate datastream processing techniques is essential for efficient and effective data analysis.

- Windowing: Windowing techniques allow data to be processed in smaller chunks, enabling real-time analysis without having to process the entire stream at once. Different windowing techniques, such as tumbling, sliding, and session windows, offer varying levels of granularity and flexibility.

- Aggregation: Aggregation techniques summarize data streams, reducing the volume of data that downstream stages must store and process. Common aggregation operations include sum, average, count, and min/max.

- Filtering: Filtering techniques allow specific data points to be selected from a stream based on certain criteria. This can be used to focus on relevant data and reduce the computational overhead.

The choice of processing techniques depends on the specific requirements of the application and the characteristics of the data stream.

Application-Specific Challenges

Datastream technologies are widely used in various applications, each presenting its own unique challenges.

- Real-time Analytics: Datastream technologies are used to analyze data in real time, providing insights that can be used to make timely decisions. For example, in financial markets, real-time analysis of stock prices can help traders identify opportunities and mitigate risks.

- Fraud Detection: Datastream technologies are used to detect fraudulent activities in real time by analyzing patterns in transactions and user behavior. This can be used to prevent financial losses and protect customers.

- Internet of Things (IoT): Datastream technologies are used to process data from IoT devices, enabling real-time monitoring and control of physical assets. For example, in smart homes, data streams from sensors can be used to optimize energy consumption and improve comfort.

These applications highlight the importance of addressing the challenges associated with datastream technologies to unlock their full potential.

Future Trends in Datastream Technologies

Datastream technologies are constantly evolving, driven by the increasing volume, velocity, and variety of data being generated. This rapid evolution is leading to new trends that are shaping the future of data processing. These trends are not only changing how we collect and analyze data but also how we leverage it to make better decisions and create new opportunities.

The Rise of Real-Time Analytics

Real-time analytics is becoming increasingly important as businesses strive to gain insights from data as it is generated. This trend is driven by the need for faster decision-making and the ability to react to events in real-time. Real-time analytics enables organizations to identify patterns, anomalies, and trends in data streams, allowing them to make informed decisions in a timely manner. For example, financial institutions use real-time analytics to detect fraudulent transactions, while e-commerce companies use it to personalize customer experiences.

Concluding Thoughts

As we navigate the ever-increasing volume and velocity of data in today’s digital landscape, datastream technologies emerge as a powerful tool for harnessing the power of real-time insights. By embracing these technologies, organizations can unlock new opportunities for innovation, efficiency, and competitive advantage, transforming the way they operate and interact with the world around them.

Datastream technologies are revolutionizing how we collect and analyze information, leading to more efficient and insightful decision-making.

By harnessing the power of data, businesses can unlock new opportunities and drive growth in the ever-evolving digital landscape.