Datastream Technologies: A Modern Approach to Data

Datastream technologies process data continuously as it is generated, rather than in periodic batches. They are changing the way we handle and analyze data, providing real-time insights that support faster and better-informed decisions.

Imagine a world where data flows seamlessly, constantly updating and informing decisions. This is the promise of datastream technologies, which empower businesses to harness the power of continuous data streams for enhanced efficiency, improved customer experiences, and a competitive edge. From manufacturing to finance, healthcare to e-commerce, these technologies are transforming industries by enabling real-time analytics, predictive modeling, and personalized experiences.

Datastream Technologies

Datastream technologies encompass a range of tools and techniques that enable the continuous and real-time processing, analysis, and utilization of data as it is generated. These technologies are crucial in today’s data-driven world, where businesses and organizations need to make informed decisions quickly and efficiently.

Key Characteristics and Functionalities

Datastream technologies are characterized by their ability to handle high volumes of data in real time, allowing for immediate insights and actions. Key functionalities include:

- Data Ingestion: Efficiently collecting data from various sources, including sensors, applications, and databases.

- Data Transformation: Cleaning, enriching, and preparing data for analysis and processing.

- Data Analysis: Applying algorithms and techniques to extract meaningful patterns and insights from the data stream.

- Data Visualization: Presenting data in an easily understandable and actionable manner through dashboards and reports.

- Real-time Decision Making: Enabling organizations to react promptly to changing conditions and events based on real-time data analysis.
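
The functionalities listed above can be sketched as a chain of Python generators, where each stage consumes events one at a time. This is a minimal illustration, not a production design: the sensor events, the field names (`sensor`, `temp_c`), and the 25-degree alert threshold are all assumptions made for the example.

```python
from typing import Dict, Iterable, Iterator, List

# Hypothetical sensor readings; in practice these would arrive from a queue or socket.
RAW_EVENTS = [
    {"sensor": "s1", "temp_c": "21.5"},
    {"sensor": "s2", "temp_c": "bad"},  # malformed reading
    {"sensor": "s1", "temp_c": "22.5"},
    {"sensor": "s2", "temp_c": "30.0"},
]


def ingest(source: Iterable[dict]) -> Iterator[dict]:
    """Ingestion: hand over events one at a time as they arrive."""
    for event in source:
        yield event


def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Transformation: parse the temperature and drop malformed events."""
    for event in events:
        try:
            yield {"sensor": event["sensor"], "temp_c": float(event["temp_c"])}
        except (KeyError, ValueError):
            continue


def analyze(events: Iterable[dict]) -> Iterator[dict]:
    """Analysis: attach a running mean per sensor to every event."""
    totals: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    for event in events:
        sensor = event["sensor"]
        totals[sensor] = totals.get(sensor, 0.0) + event["temp_c"]
        counts[sensor] = counts.get(sensor, 0) + 1
        yield {**event, "running_mean": totals[sensor] / counts[sensor]}


def decide(events: Iterable[dict], threshold: float = 25.0) -> Iterator[str]:
    """Decision making: emit an alert for readings above the threshold."""
    for event in events:
        if event["temp_c"] > threshold:
            yield f"ALERT {event['sensor']}: {event['temp_c']} C"


def run_pipeline(source: Iterable[dict], threshold: float = 25.0) -> List[str]:
    """Wire the stages together and collect the alerts."""
    return list(decide(analyze(transform(ingest(source))), threshold))


if __name__ == "__main__":
    print(run_pipeline(RAW_EVENTS))
```

Because every stage is a generator, no stage waits for the full dataset; each event flows through the whole chain before the next one is read.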

Types of Datastream Technologies

Datastream technologies encompass a diverse range of tools and techniques, each with specific applications and strengths. Some common types include:

- Message Queues: These technologies facilitate asynchronous communication between applications, enabling the reliable and efficient transmission of data streams. Examples include RabbitMQ, a message broker, and Apache Kafka, a distributed event streaming platform that is often used in the same role.

- Stream Processing Engines: These platforms allow for real-time analysis and processing of data streams, enabling rapid insights and actions. Popular examples include Apache Flink and Apache Spark Streaming.

- Data Pipelines: These automated workflows orchestrate the flow of data from source to destination, enabling efficient data processing and analysis. Tools like Apache Airflow and Prefect are commonly used to orchestrate such workflows, typically as scheduled or event-triggered jobs that run alongside the streaming components.

- Time Series Databases: These specialized databases are designed to store and query time-series data, facilitating efficient analysis of data streams over time. Examples include InfluxDB and Prometheus.
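
To make the asynchronous hand-off behind message queues concrete, here is a small sketch that uses Python's standard `queue.Queue` as an in-process stand-in for a broker. It does not use the Kafka or RabbitMQ client APIs; the `SENTINEL` end-of-stream marker and the doubling step in the consumer are assumptions chosen for illustration.

```python
import queue
import threading

SENTINEL = None  # assumption: None marks the end of the stream in this sketch


def producer(q: queue.Queue, events) -> None:
    """Publish events; put() blocks when the bounded queue is full (backpressure)."""
    for event in events:
        q.put(event)
    q.put(SENTINEL)


def consumer(q: queue.Queue, results: list) -> None:
    """Consume events in arrival order until the sentinel is seen."""
    while True:
        event = q.get()
        if event is SENTINEL:
            break
        results.append(event * 2)  # placeholder for real processing


def run(events, maxsize: int = 2) -> list:
    """Run one producer and one consumer on separate threads."""
    q = queue.Queue(maxsize=maxsize)
    results = []
    producer_thread = threading.Thread(target=producer, args=(q, events))
    consumer_thread = threading.Thread(target=consumer, args=(q, results))
    consumer_thread.start()
    producer_thread.start()
    producer_thread.join()
    consumer_thread.join()
    return results


if __name__ == "__main__":
    print(run([1, 2, 3, 4, 5]))
```

The producer and consumer never call each other directly. The queue decouples them, and its bounded size slows the producer down when the consumer falls behind.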

Datastream Technologies in Action

Datastream technologies are not just theoretical concepts; they are actively shaping various industries, driving innovation and efficiency. These technologies are transforming how businesses collect, process, and utilize data, leading to improved decision-making and better customer experiences.

Real-World Examples of Datastream Technologies

The application of datastream technologies is vast and spans across numerous industries. Here are some real-world examples:

- E-commerce: Datastream technologies are vital for e-commerce platforms, allowing them to analyze customer behavior in real-time. By tracking browsing patterns, purchase history, and user interactions, businesses can personalize recommendations, optimize product placements, and provide targeted promotions, enhancing customer engagement and driving sales.

- Finance: In the financial sector, datastream technologies are used for fraud detection, risk assessment, and algorithmic trading. Real-time analysis of financial data enables banks and investment firms to identify suspicious transactions, assess creditworthiness, and execute trades with greater precision.

- Healthcare: Datastream technologies are revolutionizing healthcare by enabling real-time monitoring of patient vital signs, medication adherence, and disease progression. This allows healthcare providers to make informed decisions, intervene early in potential complications, and personalize treatment plans for better patient outcomes.

- Manufacturing: Datastream technologies are critical in manufacturing for optimizing production processes, predicting equipment failures, and improving quality control. By analyzing sensor data from machines and equipment, manufacturers can identify bottlenecks, predict maintenance needs, and ensure product quality, leading to increased efficiency and reduced downtime.

Case Studies of Datastream Technologies Implementation

The following well-known companies illustrate how datastream technologies are applied at scale:

- Netflix: Netflix leverages datastream technologies extensively for personalized recommendations, content creation, and customer engagement. By analyzing user viewing habits and preferences, Netflix provides tailored recommendations, optimizes content production, and personalizes the user experience, contributing to its immense success.

- Uber: Uber relies heavily on datastream technologies to manage its ride-hailing operations. Real-time data on driver availability, rider demand, and traffic conditions enables Uber to optimize pricing, dispatch drivers efficiently, and provide seamless user experiences, making it a dominant player in the ride-sharing market.

- Amazon: Amazon uses datastream technologies to personalize product recommendations, optimize inventory management, and improve customer service. By analyzing customer browsing and purchase history, Amazon provides personalized product suggestions, anticipates demand, and offers tailored customer support, enhancing the shopping experience and driving sales.

Hypothetical Scenario for Solving a Business Problem

Imagine a retail chain struggling with inventory management, experiencing stockouts and overstocking, leading to lost sales and increased costs.

- Problem: Inefficient inventory management, resulting in stockouts and overstocking.

- Solution: Implementing a datastream technology solution to analyze real-time sales data, customer demand, and supplier information.

- Benefits: By analyzing real-time data, the retail chain can optimize inventory levels, predict demand fluctuations, and minimize stockouts and overstocking. This leads to increased sales, reduced costs, and improved customer satisfaction.
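
As a rough sketch of how such a solution might react to sales data as it arrives, the class below keeps a stock level and a short moving average of demand per product and raises a reorder alert when stock falls to the expected demand over the supplier lead time. The class name `InventoryMonitor`, the reorder rule, and the window and lead-time values are hypothetical simplifications, not a recommended inventory policy.

```python
from collections import defaultdict, deque
from typing import Dict, Optional


class InventoryMonitor:
    """Track stock per SKU and flag reorders from a stream of sales totals."""

    def __init__(self, initial_stock: Dict[str, int], window: int = 3,
                 lead_time_periods: int = 2) -> None:
        self.stock = dict(initial_stock)
        self.lead_time = lead_time_periods
        # Keep only the most recent `window` sales figures for each SKU.
        self.recent = defaultdict(lambda: deque(maxlen=window))

    def on_period_sales(self, sku: str, units_sold: int) -> Optional[str]:
        """Process one period's sales for a SKU; return an alert string or None."""
        self.stock[sku] -= units_sold
        self.recent[sku].append(units_sold)
        avg_demand = sum(self.recent[sku]) / len(self.recent[sku])
        # Assumed rule: reorder once stock covers no more than the lead time.
        reorder_point = avg_demand * self.lead_time
        if self.stock[sku] <= reorder_point:
            return (f"REORDER {sku}: stock={self.stock[sku]}, "
                    f"reorder_point={reorder_point:.1f}")
        return None


if __name__ == "__main__":
    monitor = InventoryMonitor({"A": 100})
    for sold in (10, 30, 35):
        print(monitor.on_period_sales("A", sold))
```

A real deployment would also account for supplier data, seasonality, and safety stock, which this sketch leaves out.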

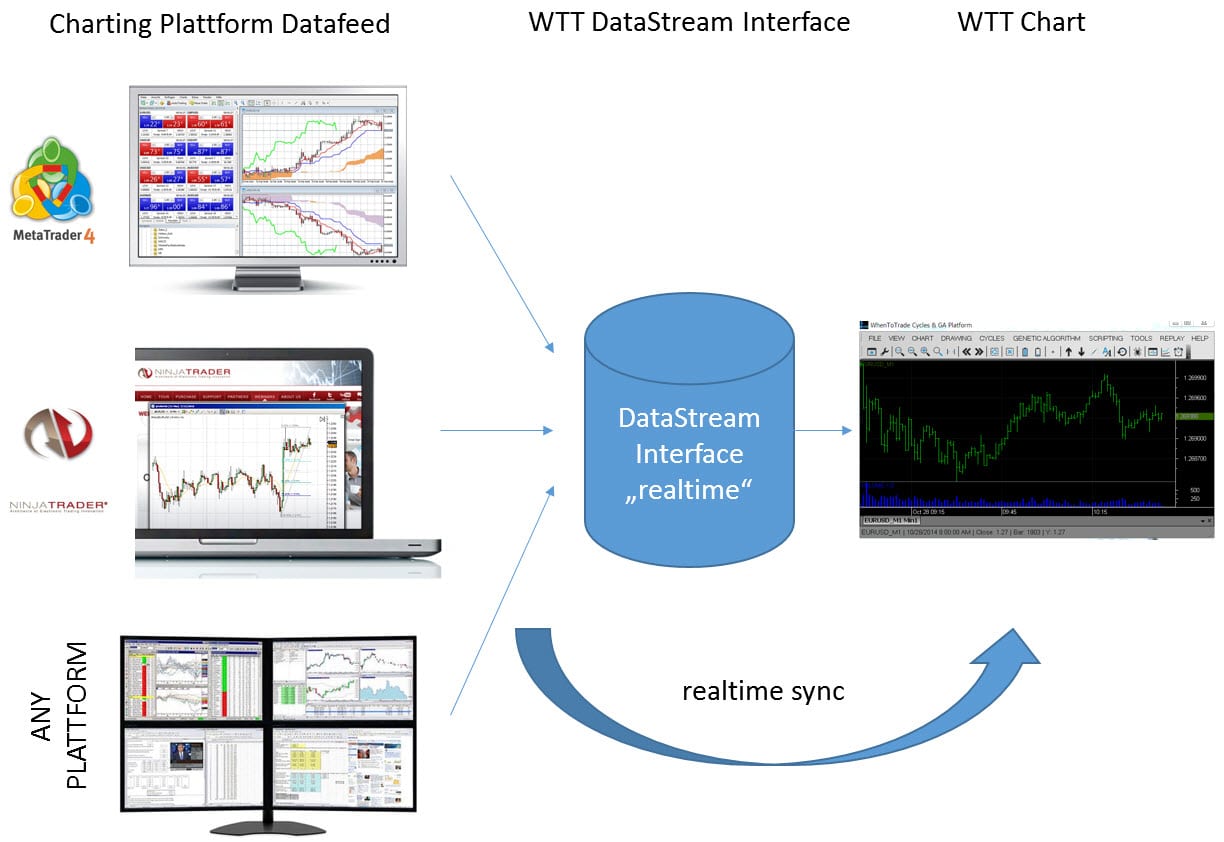

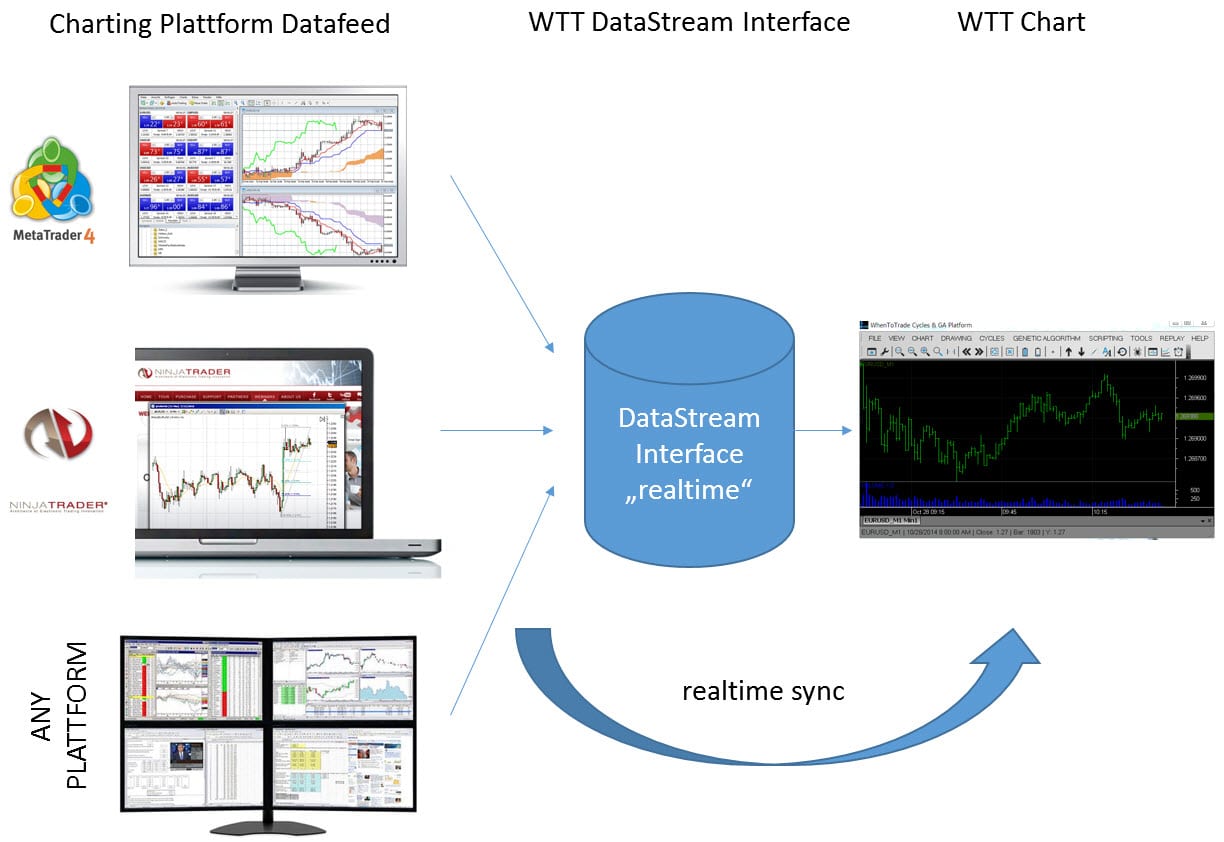

Architecture and Components of Datastream Technologies

Datastream technologies rely on a complex and sophisticated architecture to efficiently handle the high volume and velocity of data. These systems are typically composed of various components, each playing a crucial role in the ingestion, processing, and delivery of data streams.

Components of a Datastream Technology System

The components of a datastream technology system work together to capture, process, and deliver real-time data. Each component contributes to the overall functionality of the system.

| Component Name | Function | Examples |
|---|---|---|
| Data Source | Generates and emits data streams. | Sensors, IoT devices, social media feeds, financial trading platforms. |
| Data Ingestion | Collects data from sources and prepares it for processing. | Apache Kafka, Apache Flume, Apache NiFi, Amazon Kinesis. |
| Data Processing | Transforms and analyzes data streams in real time. | Apache Spark Streaming, Apache Flink, Apache Storm. |
| Data Storage | Stores processed data for future analysis and retrieval. | Apache Cassandra, Apache HBase. |
| Data Delivery | Distributes processed data to consumers and applications. | Message queues, APIs, dashboards, machine learning models. |

Datastream Technologies and Data Analytics

Datastream technologies are essential for real-time data analytics, allowing organizations to gain insights from continuously flowing data and make timely decisions. These technologies enable the processing and analysis of data as it arrives, providing a dynamic and responsive approach to data understanding.

Real-Time Data Analytics

Datastream technologies facilitate real-time data analytics by enabling the continuous processing and analysis of data as it is generated. This real-time approach provides several advantages:

- Immediate Insights: Datastream technologies allow for the immediate analysis of data, providing insights as events occur. This enables organizations to react quickly to changing conditions and make informed decisions in real-time.

- Dynamic Decision Making: Real-time analytics empowers organizations to adapt their strategies and actions based on the latest data trends, leading to more agile and responsive decision-making.

- Early Detection and Prevention: By continuously monitoring data streams, organizations can identify anomalies and potential issues early on, allowing for proactive intervention and prevention of negative outcomes.

Challenges of Analyzing Data Streams

Analyzing data streams presents unique challenges due to the continuous flow of data:

- Data Velocity: The high speed at which data arrives requires low-latency processing that keeps pace with the incoming flow. A system that falls behind accumulates a growing backlog and loses its real-time value.

- Data Variety: Data streams often consist of diverse data types and formats, necessitating flexible and adaptable processing methods to handle different data structures.

- Data Volume: The sheer volume of data generated by data streams can pose significant challenges for storage, processing, and analysis. Efficient data management strategies are crucial to handle the scale of data.

Addressing Challenges

To address these challenges, datastream technologies employ various strategies:

- Stream Processing Engines: These engines are specifically designed to handle the high volume, velocity, and variety of data streams, providing real-time processing and analysis capabilities. Examples include Apache Flink, Kafka Streams (the stream processing library that ships with Apache Kafka), and Apache Spark Streaming.

- Distributed Computing: By distributing processing tasks across multiple nodes, datastream technologies can handle large volumes of data and achieve faster processing times.

- Data Aggregation and Summarization: Techniques like windowing and aggregation are used to summarize data streams and reduce the volume of data that needs to be processed, making analysis more manageable.
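
Windowing can be illustrated with a tumbling (fixed-size, non-overlapping) window that reduces each window to a count and a sum. The sketch below assumes events arrive as `(timestamp_seconds, value)` pairs already sorted by timestamp; real engines also deal with late and out-of-order events, which are ignored here.

```python
from typing import Iterable, Iterator, Tuple


def tumbling_window_sums(
    events: Iterable[Tuple[int, float]], window_seconds: int
) -> Iterator[Tuple[int, int, float]]:
    """Yield (window_start, count, total) for each non-empty window.

    Assumes events are sorted by timestamp. Windows with no events are skipped.
    """
    current_start = None
    count = 0
    total = 0.0
    for timestamp, value in events:
        # Align the timestamp to the start of its window.
        start = (timestamp // window_seconds) * window_seconds
        if current_start is None:
            current_start = start
        elif start != current_start:
            # The previous window is complete: emit its summary and reset.
            yield (current_start, count, total)
            current_start, count, total = start, 0, 0.0
        count += 1
        total += value
    if current_start is not None:
        yield (current_start, count, total)


if __name__ == "__main__":
    sample = [(0, 1.0), (3, 2.0), (9, 3.0), (10, 4.0), (25, 5.0)]
    for summary in tumbling_window_sums(sample, window_seconds=10):
        print(summary)
```

Five raw events become three summaries here, and only one window's state is held in memory at a time.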

Data Analytics Techniques for Data Streams

Datastream technologies enable the application of various data analytics techniques to extract meaningful insights from continuous data:

- Real-Time Anomaly Detection: Techniques like statistical outlier detection and machine learning algorithms can be used to identify unusual patterns and anomalies in data streams, providing early warnings of potential issues.

- Real-Time Trend Analysis: Data stream analytics can be used to track trends and patterns in real-time, allowing organizations to understand evolving customer behavior, market dynamics, and other key indicators.

- Real-Time Predictive Modeling: Machine learning models can be trained on data streams to predict future events, such as customer churn, equipment failure, or demand forecasting, enabling proactive decision-making.

- Real-Time Event Correlation: Data streams can be analyzed to identify relationships and correlations between different events, providing a deeper understanding of complex situations and enabling more informed responses.
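
As one possible instance of statistical outlier detection on a stream, the sketch below keeps a running mean and variance with Welford's algorithm and flags a value whose z-score against the values seen so far exceeds a threshold. The class name, the threshold of 3.0, and the five-value warm-up period are assumptions for illustration.

```python
import math


class RunningZScoreDetector:
    """Flag values that lie far from the running mean of the stream so far."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5) -> None:
        self.threshold = threshold
        self.warmup = warmup  # number of values to observe before flagging
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Score x against past values, then fold x into the statistics."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford's online update: constant memory, one pass over the data.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly


if __name__ == "__main__":
    detector = RunningZScoreDetector()
    stream = [10, 11, 9, 10, 12, 10, 50, 11]
    print([detector.update(value) for value in stream])
```

Note one design choice: flagged values are still folded into the statistics, which inflates the variance afterwards. Whether to exclude them depends on whether anomalies are expected to become the new normal.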

Emerging Trends in Datastream Technologies

The field of datastream technologies is constantly evolving, driven by advancements in computing power, data storage, and data analytics. Emerging trends are shaping the future of datastream technologies, enabling new possibilities for real-time data processing and analysis.

Edge Computing

Edge computing brings data processing closer to the source of data generation, reducing latency and enabling faster decision-making. This approach is particularly beneficial for applications that require real-time insights, such as autonomous vehicles, industrial automation, and smart cities.

- Reduced Latency: By processing data closer to the source, edge computing minimizes the time it takes for data to travel to a centralized data center, resulting in faster response times.

- Improved Bandwidth Utilization: Edge computing reduces the amount of data that needs to be transmitted over the network, improving bandwidth utilization and reducing network congestion.

- Enhanced Data Security: Processing data at the edge can enhance data security by reducing the need to transmit sensitive information over long distances.

Edge computing is transforming datastream technologies by enabling real-time data processing and analysis at the edge, leading to more responsive and efficient systems.
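
The bandwidth benefit can be sketched with a simple deadband (report-by-exception) filter running on an edge device: a reading is forwarded only when it differs from the last transmitted value by more than a tolerance. The function name and the 0.5 tolerance are illustrative assumptions.

```python
from typing import Iterable, Iterator


def deadband_filter(readings: Iterable[float], deadband: float) -> Iterator[float]:
    """Yield only readings that moved more than `deadband` since the last one sent."""
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > deadband:
            last_sent = value
            yield value  # in a real device, this is where the upload would happen


if __name__ == "__main__":
    readings = [20.0, 20.1, 20.2, 21.0, 21.1, 19.5]
    print(list(deadband_filter(readings, deadband=0.5)))
```

In this example six local readings turn into three transmissions. The trade-off is that the central system sees a coarser signal, so the tolerance has to match what downstream analysis can accept.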

Real-Time Machine Learning

Real-time machine learning allows models to learn and adapt to changing data patterns in real time, enabling faster and more accurate predictions. This approach is crucial for applications where data patterns are dynamic and require immediate insights, such as fraud detection, personalized recommendations, and predictive maintenance.

- Dynamic Model Updates: Real-time machine learning models can continuously learn from new data, adapting to changing patterns and improving their accuracy over time.

- Improved Decision-Making: By providing real-time insights, real-time machine learning enables faster and more informed decision-making, leading to better outcomes.

- Enhanced Efficiency: Real-time machine learning can automate processes, optimize resource allocation, and improve operational efficiency.

Real-time machine learning is revolutionizing datastream technologies by enabling models to learn and adapt in real time, leading to more intelligent and responsive systems.
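
Dynamic model updates can be illustrated with online stochastic gradient descent, where a model adjusts its parameters after every single observation instead of being retrained on a stored dataset. The one-feature linear model below is a deliberately small sketch; the class name, the learning rate, and the synthetic stream `y = 2x + 1` are assumptions for the example.

```python
class OnlineLinearModel:
    """One-feature linear model y = w * x + b, updated one observation at a time."""

    def __init__(self, learning_rate: float = 0.1) -> None:
        self.w = 0.0
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def learn_one(self, x: float, y: float) -> float:
        """Take one gradient step on the squared error; return the pre-update error."""
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error
        return error


if __name__ == "__main__":
    model = OnlineLinearModel()
    # Synthetic stream following y = 2x + 1, replayed several times.
    for _ in range(500):
        for x in (0.0, 0.25, 0.5, 0.75, 1.0):
            model.learn_one(x, 2 * x + 1)
    print(round(model.w, 3), round(model.b, 3))
```

If the underlying relationship in the stream drifts, the same update rule keeps pulling the parameters toward the new pattern, which is the adaptive behavior described above.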

Data Streaming with Blockchain

Blockchain technology provides a secure and transparent platform for data streaming, ensuring data integrity and provenance. This approach is particularly relevant for applications where data security and trust are paramount, such as supply chain management, financial transactions, and healthcare data sharing.

- Enhanced Data Security: Blockchain’s decentralized, append-only design makes recorded data tamper-evident, reducing the risk of undetected modification. Access control and confidentiality still need to be handled separately.

- Improved Data Transparency: Blockchain technology allows for auditable and verifiable data streams, increasing transparency and accountability.

- Streamlined Data Sharing: Blockchain can facilitate secure and efficient data sharing between different parties, enabling collaboration and innovation.

Blockchain is transforming datastream technologies by providing a secure and transparent platform for data streaming, enhancing data integrity and trust.
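
The integrity property rests on hash chaining, which can be shown in a few lines. The sketch below is only a single-process hash chain, not a blockchain: it has no consensus, no network, and no decentralization. The record layout and the function names are assumptions made for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # assumed placeholder hash for the first record


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash the previous record's hash together with this record's payload."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + body).hexdigest()


def append_record(chain: list, payload: dict) -> None:
    """Append a stream record that is linked to the one before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": _digest(prev_hash, payload),
    })


def verify_chain(chain: list) -> bool:
    """Recompute every hash; any edited payload or broken link fails the check."""
    prev_hash = GENESIS_HASH
    for record in chain:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["payload"]):
            return False
        prev_hash = record["hash"]
    return True


if __name__ == "__main__":
    chain = []
    for qty in (5, 7, 3):
        append_record(chain, {"item": "widget", "qty": qty})
    print(verify_chain(chain))
    chain[1]["payload"]["qty"] = 999  # simulate tampering with a past record
    print(verify_chain(chain))
```

Because each hash covers the previous one, changing any past record invalidates every record after it, which is what makes the stream auditable.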

Datastream Technologies for the Internet of Things (IoT)

The Internet of Things (IoT) is generating vast amounts of data from connected devices, creating a need for efficient and scalable datastream technologies to manage and analyze this data.

- Real-Time Data Processing: Datastream technologies are essential for processing and analyzing IoT data in real time, enabling timely insights and actions.

- Scalability and Reliability: IoT data streams can be massive and unpredictable, requiring datastream technologies that are scalable and reliable to handle the volume and velocity of data.

- Data Security and Privacy: Datastream technologies must address the security and privacy concerns associated with IoT data, protecting sensitive information and ensuring compliance with regulations.

Datastream technologies are playing a crucial role in enabling the growth and success of the Internet of Things by providing the infrastructure for managing and analyzing the massive amounts of data generated by connected devices.

Datastream Technologies and Artificial Intelligence (AI)

Artificial intelligence (AI) is increasingly being used in datastream technologies to enhance data analysis and decision-making. AI algorithms can be trained on data streams to identify patterns, make predictions, and automate tasks.

- Automated Data Analysis: AI algorithms can automate the process of data analysis, identifying patterns and insights that might be missed by human analysts.

- Real-Time Decision-Making: AI-powered datastream technologies can enable real-time decision-making, automating tasks and responding to events as they occur.

- Personalized Experiences: AI can be used to personalize user experiences, tailoring recommendations and services based on real-time data streams.

The integration of AI with datastream technologies is creating new opportunities for intelligent and automated systems, transforming how we interact with data and make decisions.

Concluding Remarks

As we navigate the ever-evolving landscape of data, datastream technologies emerge as a powerful tool for harnessing the potential of continuous information flow. They are not just about processing data; they are about transforming it into actionable insights, enabling us to make informed decisions in a rapidly changing world. The future of data is dynamic, and datastream technologies are at the forefront of this exciting evolution, paving the way for a more data-driven and intelligent future.
