Unlocking AI Potential: The Technology Stack

The AI technology stack is the foundation upon which powerful artificial intelligence applications are built. It encompasses a collection of tools, technologies, and processes that work together to enable the development, training, deployment, and monitoring of AI models. From data acquisition to model evaluation, each component plays a crucial role in the journey of transforming raw data into intelligent insights.

Understanding the AI technology stack is essential for anyone involved in AI development, implementation, or utilization. Whether you’re a data scientist, software engineer, business leader, or simply curious about the workings of AI, this guide provides a comprehensive overview of the key components, trends, and considerations within this complex and rapidly evolving landscape.

Defining the AI Technology Stack

Think of an AI technology stack as the building blocks that make up an artificial intelligence system. Just like a traditional software stack has layers for different functionalities, an AI stack has components that work together to process data, learn from it, and make intelligent decisions.

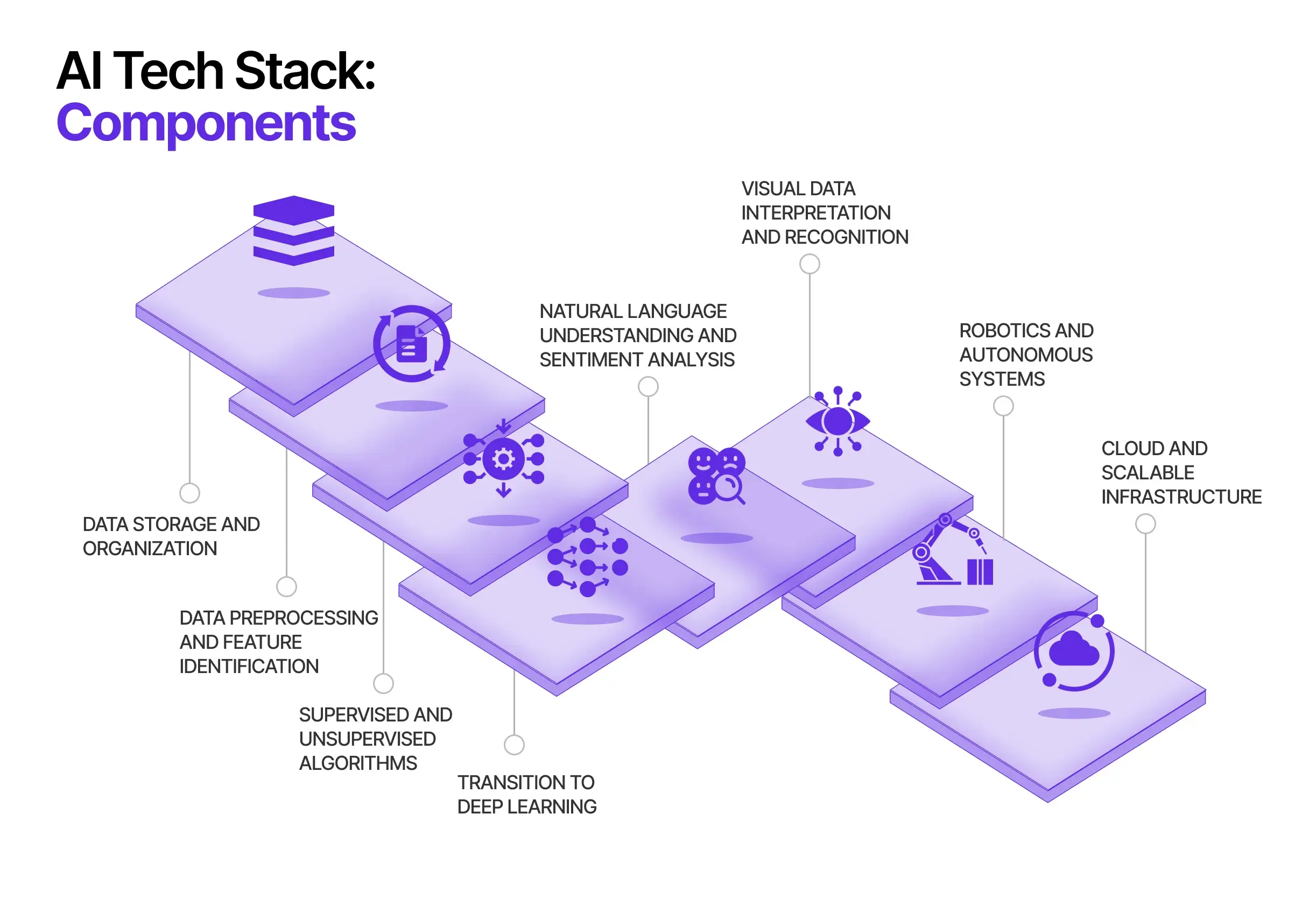

Components of an AI Technology Stack

The components of an AI technology stack are interconnected and work together to achieve the desired AI outcomes. These components can be broadly categorized as follows:

- Data Infrastructure: This layer focuses on collecting, storing, and managing the data that fuels AI models. It includes data sources, data lakes, data warehouses, and data pipelines.

- Data Preparation and Feature Engineering: This layer prepares the raw data for AI model training by cleaning, transforming, and extracting relevant features. This involves tasks like data cleansing, normalization, and feature selection.

- Machine Learning Models: This layer houses the core AI algorithms that learn from the data and make predictions. It includes models trained under different learning paradigms, such as supervised, unsupervised, and reinforcement learning.

- Model Training and Evaluation: This layer involves training the machine learning models on the prepared data and evaluating their performance using metrics like accuracy, precision, and recall.

- Model Deployment and Monitoring: This layer focuses on deploying the trained models into production environments and continuously monitoring their performance to ensure accuracy and identify potential issues.

- AI Tools and Frameworks: This layer provides the tools and libraries that simplify the development, deployment, and management of AI solutions. Examples include TensorFlow, PyTorch, and scikit-learn.

- AI Applications and Integrations: This layer involves integrating AI models into existing applications or creating new AI-powered applications to solve specific business problems.
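
To make the layering concrete, here is a minimal sketch of how three of these layers hand data to one another: data preparation (min-max normalization), a model (a nearest-centroid classifier), and evaluation (accuracy). It assumes a tiny hypothetical in-memory dataset and uses only the Python standard library; the function names are illustrative and not taken from any framework. A production stack would replace each function with a dedicated tool from the frameworks layer.

```python
from statistics import mean

def fit_min_max(rows):
    """Learn per-feature minimum and maximum from the training rows only."""
    columns = list(zip(*rows))
    return [min(c) for c in columns], [max(c) for c in columns]

def apply_min_max(rows, lows, highs):
    """Data preparation layer: rescale features using the training min/max.

    Values outside the training range map outside [0, 1]; constant features map to 0.0.
    """
    return [
        tuple((v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, lows, highs))
        for row in rows
    ]

def train_nearest_centroid(rows, labels):
    """Model layer: store the mean feature vector (centroid) of each class."""
    centroids = {}
    for label in sorted(set(labels)):
        members = [r for r, y in zip(rows, labels) if y == label]
        centroids[label] = tuple(mean(col) for col in zip(*members))
    return centroids

def predict(centroids, row):
    """Return the class whose centroid is nearest in squared Euclidean distance."""
    return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(row, centroids[c])))

def accuracy(y_true, y_pred):
    """Evaluation layer: fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical toy data: two numeric features per row, binary labels.
raw = [(1.0, 200.0), (2.0, 180.0), (8.0, 20.0), (9.0, 40.0), (1.5, 190.0), (8.5, 30.0)]
labels = [0, 0, 1, 1, 0, 1]
train_x, train_y = raw[:4], labels[:4]
test_x, test_y = raw[4:], labels[4:]

lows, highs = fit_min_max(train_x)
model = train_nearest_centroid(apply_min_max(train_x, lows, highs), train_y)
preds = [predict(model, row) for row in apply_min_max(test_x, lows, highs)]
print(preds, accuracy(test_y, preds))  # [0, 1] 1.0
```

Note that the normalization statistics are learned from the training rows only and then reused on the test rows, so the evaluation layer never sees information leaked from the test data.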

Examples of AI Technology Stacks in Different Industries

AI technology stacks are customized to address the specific needs and challenges of different industries. Here are some examples:

- Healthcare: In healthcare, AI stacks are used for tasks like disease diagnosis, drug discovery, and personalized medicine. These stacks often draw on electronic health records, medical imaging, and genomic data, along with machine learning models for image analysis, natural language processing, and predictive modeling.

- Finance: Financial institutions use AI stacks for fraud detection, risk assessment, and algorithmic trading. These stacks typically combine transaction records, market data, and customer behavior data with machine learning models for anomaly detection, sentiment analysis, and forecasting.

- Retail: Retailers leverage AI stacks for personalized recommendations, inventory management, and customer service. These stacks often incorporate data from customer purchases, website interactions, and social media, along with machine learning models for recommendation engines, demand forecasting, and chatbot interactions.
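
As a small illustration of the anomaly detection mentioned for finance, the sketch below flags transactions whose z-score (distance from the mean in units of standard deviation) exceeds a threshold. This is a simple statistical baseline chosen for illustration, not a description of any institution's fraud system; the amounts, the `flag_anomalies` name, and the threshold of 3.0 are all hypothetical.

```python
from statistics import mean, pstdev

def flag_anomalies(amounts, threshold=3.0):
    """Return indices of amounts whose z-score magnitude exceeds `threshold`."""
    mu = mean(amounts)
    sigma = pstdev(amounts)  # population standard deviation
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [i for i, a in enumerate(amounts) if abs(a - mu) / sigma > threshold]

# Hypothetical transaction amounts for one customer; the last one is unusually large.
amounts = [20, 25, 22, 19, 24, 21, 23, 20, 22, 24, 21, 500]
print(flag_anomalies(amounts))  # [11]
```

Because a large outlier also inflates the mean and standard deviation it is compared against, real systems often prefer robust statistics (such as the median) or learned models that use many features per transaction.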

AI Technology Stack for Different Use Cases

The AI technology stack is not a one-size-fits-all solution. It’s crucial to choose the right tools and technologies based on the specific use case. Different AI applications require different combinations of technologies and expertise. This section will explore how the AI technology stack can be tailored for specific use cases, including Natural Language Processing (NLP), Computer Vision, Machine Learning (ML), Deep Learning (DL), and Predictive Analytics.

Natural Language Processing (NLP)

Natural Language Processing (NLP) deals with the interaction between computers and human language. It enables computers to understand, interpret, and generate human language. NLP has applications in various fields, including chatbots, sentiment analysis, language translation, and text summarization.

- Text Preprocessing: This involves cleaning and preparing text data for analysis. Techniques include tokenization, stemming, and lemmatization.

- Feature Engineering: This step involves extracting meaningful features from text data. Techniques include bag-of-words, TF-IDF, and word embeddings.

- Machine Learning Models: NLP leverages various machine learning algorithms, including Support Vector Machines (SVMs), Naive Bayes, and Hidden Markov Models (HMMs). Deep learning models like Recurrent Neural Networks (RNNs) and Transformers are also widely used.

- Evaluation Metrics: Accuracy, precision, recall, and F1-score are commonly used to evaluate the performance of NLP models.
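
The preprocessing and feature engineering steps above can be sketched in a few lines: tokenize the text, then weight each term with TF-IDF so that words common to every document count for less than distinctive ones. This is a minimal sketch assuming the plain textbook formula (term frequency times the log of inverse document frequency); libraries often use smoothed variants, and stemming or lemmatization is omitted here. The sample sentences and function names are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Text preprocessing: lowercase, then split into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def tf_idf(documents):
    """Return one {term: weight} dict per document.

    tf  = count of the term in the document / number of tokens in the document
    idf = log(number of documents / number of documents containing the term)
    """
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights

docs = [
    "The model reads the text.",
    "The model labels the image.",
    "Text summarization shortens text.",
]
weights = tf_idf(docs)
# "reads" appears in one document and "the" in two, so "reads" gets the higher weight in docs[0].
print(weights[0]["reads"] > weights[0]["the"])  # True
```

The resulting term weights can serve as input features for the classical models listed above, such as SVMs or Naive Bayes.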

Computer Vision

Computer Vision enables computers to “see” and interpret images and videos. This field has applications in areas like object detection, image classification, facial recognition, and medical imaging.

- Image Preprocessing: This step involves cleaning and preparing image data for analysis. Techniques include resizing, cropping, and noise reduction.

- Feature Extraction: This involves extracting features from images. Techniques include Scale-Invariant Feature Transform (SIFT), Histogram of Oriented Gradients (HOG), and Convolutional Neural Networks (CNNs).

- Machine Learning Models: Computer vision utilizes various machine learning algorithms, including Support Vector Machines (SVMs), Random Forests, and K-Nearest Neighbors (KNN). Deep learning models like Convolutional Neural Networks (CNNs) are widely used for image classification and object detection.

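- Evaluation Metrics: Accuracy, precision, recall, and F1-score are commonly used to evaluate image classification models. Object detection models are usually evaluated with intersection over union (IoU), which measures how closely a predicted bounding box overlaps the true box, and mean average precision (mAP).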
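
The image preprocessing step (resizing, cropping, and noise reduction) can be illustrated on a tiny grayscale image stored as a list of rows. This sketch assumes nearest-neighbor resizing and a 3x3 mean (box) filter as the noise-reduction technique; real pipelines typically use an imaging library and may prefer other filters (for example, a median filter for salt-and-pepper noise). The image values and function names are hypothetical.

```python
def resize_nearest(image, new_h, new_w):
    """Resize a 2-D grayscale image (list of rows) with nearest-neighbor sampling."""
    h, w = len(image), len(image[0])
    return [[image[r * h // new_h][c * w // new_w] for c in range(new_w)] for r in range(new_h)]

def center_crop(image, height, width):
    """Keep a height x width window from the middle of the image."""
    top = (len(image) - height) // 2
    left = (len(image[0]) - width) // 2
    return [row[left:left + width] for row in image[top:top + height]]

def mean_filter(image):
    """3x3 mean (box) filter for noise reduction.

    Border pixels average only the neighbors that fall inside the image.
    """
    h, w = len(image), len(image[0])
    out = []
    for r in range(h):
        out_row = []
        for c in range(w):
            window = [image[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            out_row.append(sum(window) / len(window))
        out.append(out_row)
    return out

# Hypothetical 4x4 grayscale image with one bright "noise" pixel at row 2, column 2.
image = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 100, 10],
    [10, 10, 10, 10],
]
print(resize_nearest(image, 2, 2))   # [[10, 10], [10, 100]]
print(center_crop(image, 2, 2))      # [[10, 10], [10, 100]]
print(mean_filter(image)[2][2])      # 20.0
```

The mean filter spreads the isolated bright pixel across its neighborhood, lowering it from 100 to 20, at the cost of slightly blurring genuine edges.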

Machine Learning (ML)

Machine Learning (ML) is a subset of Artificial Intelligence (AI) that enables systems to learn from data without being explicitly programmed. It involves training algorithms on data to make predictions or decisions. ML has applications in various fields, including fraud detection, spam filtering, and recommendation systems.

- Data Collection and Preparation: This involves gathering and cleaning data for training and testing ML models. Data quality is crucial for accurate model performance.

- Feature Engineering: This involves selecting and transforming relevant features from data. Techniques include feature scaling (such as normalization), feature selection, and dimensionality reduction.

- Model Selection and Training: ML utilizes various algorithms, including linear regression, logistic regression, decision trees, and support vector machines. The choice of algorithm depends on the specific problem and data characteristics.

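- Model Evaluation and Optimization: Classification models are commonly evaluated with accuracy, precision, recall, and F1-score, while regression models use error metrics such as mean absolute error (MAE) or root mean squared error (RMSE). Cross-validation gives a more reliable estimate of performance on unseen data, and hyperparameter tuning uses such estimates to optimize the model.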
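
The evaluation step above relies on cross-validation and on precision, recall, and F1-score. Here is a minimal sketch of both: a k-fold splitter that places every sample in exactly one test fold, and the three metrics computed from true positives, false positives, and false negatives. The function names and sample labels are hypothetical; libraries such as scikit-learn provide tested equivalents.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs; every sample is in exactly one test fold.

    Folds are contiguous here; shuffle the data first when row order carries no meaning.
    """
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train_idx, test_idx
        start += size

def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

for train_idx, test_idx in k_fold_indices(10, 3):
    print(len(train_idx), test_idx)
# 6 [0, 1, 2, 3]
# 7 [4, 5, 6]
# 7 [7, 8, 9]

scores = precision_recall_f1([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
print([round(v, 3) for v in scores])  # [0.667, 0.667, 0.667]
```

In a full cross-validation loop, a model is trained on each `train_idx` split, scored on the matching `test_idx` split, and the scores are averaged.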

Deep Learning (DL)

Deep Learning (DL) is a subfield of Machine Learning that uses artificial neural networks with multiple layers to learn complex patterns from data. DL has achieved significant breakthroughs in areas like image recognition, natural language processing, and speech recognition.

- Data Collection and Preparation: DL typically requires large amounts of training data. Data cleaning and augmentation (creating modified copies of existing examples, such as flipped or rotated images) are crucial steps.

- Model Architecture Selection: DL models are designed based on specific problem requirements. Architectures like Convolutional Neural Networks (CNNs) for image processing and Recurrent Neural Networks (RNNs) for sequential data are commonly used.

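- Model Training and Optimization: Training DL models involves repeatedly passing training data through the network and adjusting its parameters to minimize a loss function. Backpropagation computes the gradient of the loss with respect to each parameter, and gradient descent (or a variant such as Adam) uses those gradients to update the parameters.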

- Model Evaluation and Deployment: DL models are evaluated with task-appropriate metrics, such as accuracy, precision, recall, and F1-score for classification. Once validated, the trained model is integrated into real-world applications.
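
The training loop behind backpropagation and gradient descent can be shown at its smallest scale: a single sigmoid neuron learning the logical AND function. This is a simplified sketch, not a deep network; a deep model stacks many such units in layers and applies the same chain rule layer by layer. It assumes binary cross-entropy loss, full-batch updates, and hypothetical hyperparameters (`lr=0.5`, `epochs=5000`).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, labels, lr=0.5, epochs=5000):
    """Fit one sigmoid neuron with full-batch gradient descent on binary cross-entropy loss."""
    w = [0.0] * len(samples[0])
    b = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in zip(samples, labels):
            # Forward pass: weighted sum, then sigmoid activation.
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            # Backward pass (chain rule): for sigmoid + cross-entropy, dLoss/dz = p - y.
            dz = p - y
            for i, xi in enumerate(x):
                grad_w[i] += dz * xi
            grad_b += dz
        # Gradient descent step using the average gradient over the batch.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x):
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) > 0.5)

# Toy data: the logical AND function.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]
w, b = train_neuron(X, y)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]
```

The clean `p - y` gradient is a property of pairing a sigmoid output with cross-entropy loss; other activation and loss combinations produce different backward-pass formulas.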

Predictive Analytics

Predictive Analytics utilizes statistical techniques and machine learning algorithms to analyze historical data and predict future outcomes. It has applications in areas like customer churn prediction, sales forecasting, and risk assessment.

- Data Collection and Preparation: This involves gathering historical data and preparing it for analysis. Data cleaning, transformation, and feature engineering are crucial steps.

- Model Selection and Training: Predictive analytics utilizes various statistical and machine learning algorithms, including regression models, time series models, and decision trees. The choice of algorithm depends on the specific problem and data characteristics.

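- Model Evaluation and Validation: Metrics depend on the prediction task. Accuracy, precision, recall, and F1-score suit classification problems such as churn prediction, while forecasting problems such as sales forecasting are usually measured with error metrics like mean absolute error (MAE). Techniques like cross-validation and backtesting are used to validate model performance.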

- Deployment and Monitoring: Once validated, predictive models are deployed into real-world applications. Monitoring model performance and retraining as needed is essential for maintaining accuracy.
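
Backtesting, mentioned above as a validation technique, can be sketched as a walk-forward loop: at each step, the model forecasts the next value using only data that was available at that time, and the errors are averaged into a mean absolute error (MAE, the average size of the forecast errors). The moving-average forecaster, the sales figures, and the function names are hypothetical stand-ins for a real predictive model.

```python
def moving_average_forecast(history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)

def walk_forward_mae(series, min_history=3, window=3):
    """Backtest: forecast each point from earlier points only, then average absolute errors."""
    if not 1 <= min_history < len(series):
        raise ValueError("need 1 <= min_history < len(series)")
    errors = []
    for t in range(min_history, len(series)):
        forecast = moving_average_forecast(series[:t], window)  # uses data before t only
        errors.append(abs(series[t] - forecast))
    return sum(errors) / len(errors)

# Hypothetical monthly sales with a steady upward trend.
sales = [10, 12, 14, 16, 18, 20]
print(walk_forward_mae(sales))  # 4.0
```

The constant error of 4.0 exposes a weakness that a random train/test split could hide: a moving average always lags a trending series, which is exactly the kind of issue backtesting is meant to reveal before deployment.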

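| Use Case | Tools and Technologies | Considerations |
|---|---|---|
| Natural Language Processing (NLP) | Tokenization, stemming, lemmatization; bag-of-words, TF-IDF, word embeddings; SVMs, Naive Bayes, HMMs, RNNs, Transformers | Text must be cleaned and preprocessed before feature extraction; evaluated with accuracy, precision, recall, and F1-score |
| Computer Vision | Resizing, cropping, noise reduction; SIFT, HOG; SVMs, Random Forests, KNN, CNNs | Images must be preprocessed; CNNs are widely used for image classification and object detection |
| Machine Learning (ML) | Feature scaling, normalization, dimensionality reduction; linear and logistic regression, decision trees, SVMs; cross-validation, hyperparameter tuning | Data quality is crucial; algorithm choice depends on the problem and data characteristics |
| Deep Learning (DL) | CNNs, RNNs; backpropagation, gradient descent; data augmentation | Requires large amounts of training data |
| Predictive Analytics | Regression models, time series models, decision trees; cross-validation, backtesting | Deployed models must be monitored and retrained as needed |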

Trends and Future Directions in AI Technology Stacks

The AI technology stack is a constantly evolving landscape, shaped by advancements in hardware, software, and algorithms. Several emerging trends are influencing the development and deployment of AI systems, pushing the boundaries of what’s possible and driving innovation across industries.

Cloud-based AI Platforms

Cloud-based AI platforms are becoming increasingly popular, offering a range of benefits for businesses of all sizes. These platforms provide access to powerful computing resources, pre-trained models, and a suite of tools for building, deploying, and managing AI applications. This accessibility has democratized AI development, enabling even small businesses to leverage the power of AI without significant upfront investments.

- Scalability and Flexibility: Cloud platforms offer the flexibility to scale computing resources on demand, allowing businesses to adapt to fluctuating workloads and changing needs. This is crucial for AI applications, which often require significant processing power.

- Cost-Effectiveness: Cloud platforms replace large upfront hardware purchases with pay-as-you-go pricing, which can lower the cost of AI development and deployment, especially for variable workloads. This makes AI more accessible to a wider range of organizations.

- Pre-trained Models: Cloud platforms provide access to a library of pre-trained models, saving developers time and effort in building models from scratch. These models can be fine-tuned for specific use cases, further accelerating AI development.

Edge Computing for AI

Edge computing brings AI processing closer to the data source, enabling real-time analysis and decision-making. This is particularly important for applications that require low latency, such as autonomous vehicles, industrial automation, and healthcare monitoring.

- Reduced Latency: Edge computing minimizes the time it takes for data to travel to a central server for processing, enabling faster responses and real-time decision-making.

- Improved Security: By processing data locally, edge computing can reduce the risk of data breaches associated with transmitting sensitive information to the cloud, although the edge devices themselves must still be secured.

- Enhanced Privacy: Edge computing allows for data processing without sending it to a central server, enhancing data privacy and compliance with regulations.

Explainable AI (XAI)

Explainable AI (XAI) focuses on making AI systems more transparent and understandable. This is crucial for building trust in AI systems and ensuring that they are used responsibly. XAI techniques aim to provide insights into the decision-making process of AI models, allowing users to understand why a particular prediction was made.

- Transparency and Trust: XAI helps to build trust in AI systems by providing users with an understanding of how decisions are made. This is particularly important in industries such as healthcare, finance, and law enforcement, where trust and accountability are paramount.

- Improved Model Debugging and Validation: XAI techniques can help to identify biases and errors in AI models, making it easier to debug and validate them.

- Regulatory Compliance: As AI systems become more prevalent, regulations are emerging to ensure their transparency and accountability. XAI can help organizations comply with these regulations.
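
One widely used, model-agnostic XAI technique is permutation feature importance: shuffle one feature at a time and measure how much the model's accuracy drops. It is only one example (per-prediction methods such as SHAP or LIME answer a different question), and the sketch below assumes a hypothetical black-box `toy_model` and toy data rather than any particular library's implementation.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)

def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is randomly shuffled.

    A large drop means the model relies on that feature; zero means it ignores it.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the labels
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

def toy_model(row):
    """Hypothetical black-box model: uses only the first feature."""
    return int(row[0] > 0.5)

rows = [(0.1, 0.9), (0.2, 0.1), (0.3, 0.5), (0.4, 0.7),
        (0.6, 0.2), (0.7, 0.8), (0.8, 0.3), (0.9, 0.6)]
labels = [toy_model(r) for r in rows]
importances = permutation_importance(toy_model, rows, labels)
print(importances)  # first value is positive; second is 0.0 because the model ignores that feature
```

The technique only needs the ability to call the model, which is why it works on otherwise opaque systems; the trade-off is that correlated features can share or mask each other's importance.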

Responsible AI and Ethical Considerations

As AI becomes more powerful and integrated into our lives, it’s essential to address the ethical implications of its development and deployment. Responsible AI principles aim to ensure that AI systems are developed and used in a way that is fair, transparent, and beneficial to society.

- Bias Mitigation: AI models can inherit biases from the data they are trained on, leading to unfair or discriminatory outcomes. Responsible AI practices emphasize the importance of identifying and mitigating biases in AI systems.

- Privacy and Security: AI systems often handle sensitive personal data. Responsible AI practices prioritize the protection of privacy and security, ensuring that data is used ethically and responsibly.

- Accountability and Transparency: It’s crucial to establish clear accountability mechanisms for AI systems. This includes transparency in decision-making processes and mechanisms for addressing potential harm caused by AI systems.
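
Identifying bias usually starts with measuring it. A simple example is demographic parity difference: the gap between groups in the rate of positive predictions. This sketch assumes hypothetical loan-approval predictions and group labels; demographic parity is only one of several fairness criteria (others, such as equalized odds, also condition on the true outcome), and the criteria can conflict with one another.

```python
def selection_rates(predictions, groups):
    """Share of positive predictions (1s) within each group."""
    rates = {}
    for g in sorted(set(groups)):
        members = [p for p, gg in zip(predictions, groups) if gg == g]
        rates[g] = sum(members) / len(members)
    return rates

def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0.0 means equal rates)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan-approval predictions (1 = approve) for applicants in groups "A" and "B".
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(predictions, groups))                 # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(predictions, groups))   # 0.5
```

A large gap does not prove unfair treatment on its own, but it flags where the training data, features, or decision threshold deserve closer review.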

Recap: The AI Technology Stack

As AI technology continues to advance at a breakneck pace, the AI technology stack will keep evolving to meet the growing demands of different industries. From cloud-based platforms to edge computing and explainable AI, emerging trends are shaping the future of AI development and deployment. By embracing these advancements, we can unlock the full potential of AI to solve complex problems, drive innovation, and create a more intelligent and interconnected world.

An AI technology stack encompasses a wide range of tools and techniques, from machine learning algorithms to natural language processing. These stacks are increasingly applied in fields like healthcare, and one example is the development of cutting-edge hearing aid technology.

By analyzing sound patterns and filtering out noise, AI-powered hearing aids can provide a more personalized and effective listening experience. As AI technology continues to evolve, we can expect even more advancements in this area, making life easier and more enjoyable for millions of people.