Dynamic Control Technologies: Shaping Modern Systems

Dynamic control technologies are revolutionizing how we design and operate modern systems, from industrial automation to healthcare. These technologies leverage feedback loops and algorithms to adjust and optimize system behavior in real time, ensuring stability, efficiency, and responsiveness.

Dynamic control systems rely on feedback: sensors measure the system’s state, a controller compares that measurement with the desired behavior, and the resulting adjustments are applied to keep performance on target. Because this loop runs continuously, the system can adapt to changing conditions, which makes dynamic control technologies crucial for a wide range of applications.

Introduction to Dynamic Control Technologies

Dynamic control technologies are essential components of modern systems, enabling them to adapt and respond effectively to changing conditions. These technologies play a crucial role in optimizing performance, enhancing safety, and ensuring stability across various applications.

Dynamic control systems employ feedback mechanisms to monitor system outputs and adjust control inputs accordingly, aiming to achieve desired outcomes. This continuous feedback loop allows for real-time adjustments, ensuring that systems remain stable and operate within specified limits.
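The monitor, compare, and adjust cycle described here can be sketched in a few lines of Python. This is a minimal illustration rather than a production controller: the plant is a hypothetical one-variable system whose state simply accumulates each adjustment, and the names `run_feedback_loop` and `gain`, along with all numeric values, are assumptions chosen for the example.

```python
def run_feedback_loop(setpoint, steps=50, gain=0.5):
    """Simulate sense -> compare -> adjust on a toy plant (hypothetical values)."""
    state = 0.0                          # plant output, e.g. a temperature
    history = []
    for _ in range(steps):
        measurement = state              # sensor: read the current output
        error = setpoint - measurement   # controller: compare with the target
        adjustment = gain * error        # controller: compute a correction
        state += adjustment              # actuator: apply it to the plant
        history.append(state)
    return history


trajectory = run_feedback_loop(setpoint=10.0)
print(round(trajectory[0], 3), round(trajectory[-1], 3))
```

With a gain of 0.5, each pass through the loop halves the remaining error, so the state moves toward the setpoint without manual intervention.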

Real-World Applications of Dynamic Control Technologies

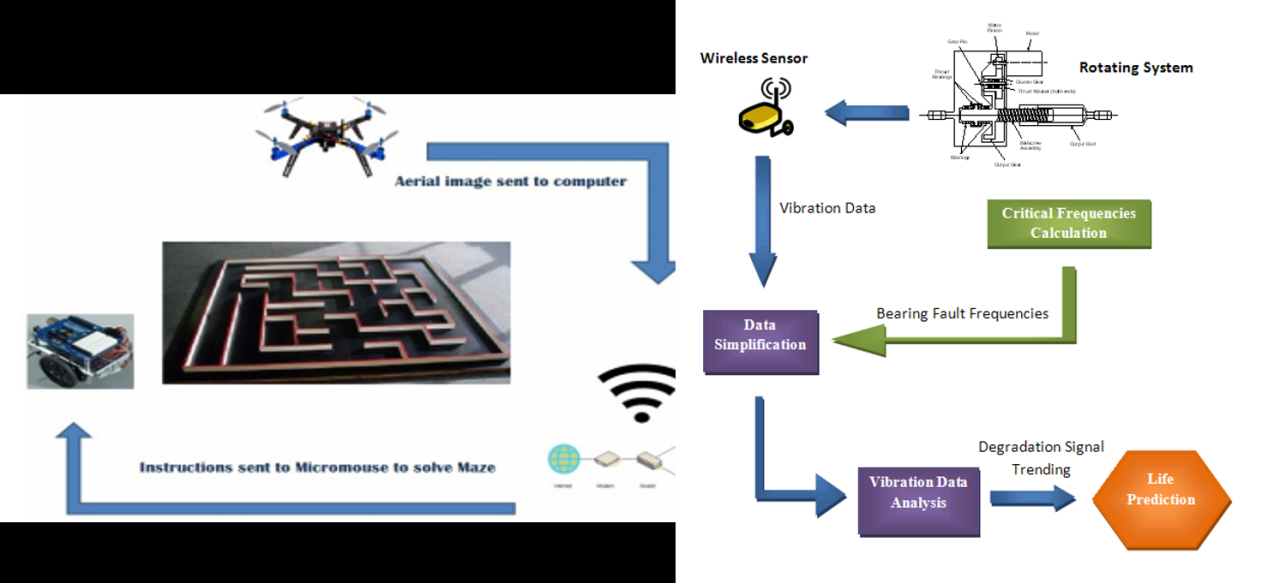

Dynamic control technologies find wide-ranging applications in various industries, contributing to advancements in automation, robotics, and process control.

- Robotics: Dynamic control algorithms are fundamental to the operation of robots, enabling them to navigate complex environments, perform precise tasks, and interact with objects safely. Examples include industrial robots used in manufacturing, surgical robots in healthcare, and autonomous vehicles in transportation.

- Process Control: In industries such as chemical processing, manufacturing, and power generation, dynamic control systems ensure optimal operation by regulating variables like temperature, pressure, and flow rate. This optimization leads to increased efficiency, reduced waste, and improved product quality.

- Aerospace: Aircraft and spacecraft rely heavily on dynamic control systems for stability, maneuverability, and precise trajectory control. These systems adjust control surfaces and thrust levels to maintain flight stability and execute complex maneuvers, ensuring safe and efficient operation.

- Automotive: Modern vehicles incorporate dynamic control technologies for enhanced safety and performance. Examples include anti-lock braking systems (ABS), electronic stability control (ESC), and adaptive cruise control (ACC), which contribute to vehicle stability, braking efficiency, and driver assistance.

Types of Dynamic Control Technologies

Dynamic control technologies encompass various approaches to regulate and optimize the behavior of systems over time. These technologies play a crucial role in diverse applications, from industrial automation to autonomous vehicles, enabling precise control and efficient operation.

PID Control

PID control, short for Proportional-Integral-Derivative control, is a widely used feedback control method. It relies on the error between the desired setpoint and the actual system output to adjust the control signal. The PID controller uses three terms:

- Proportional term: This term is proportional to the current error, providing immediate response to changes in the error.

- Integral term: This term accumulates the error over time, helping to eliminate steady-state errors and ensure the system reaches the desired setpoint.

- Derivative term: This term considers the rate of change of the error, anticipating future error trends and providing a damping effect.

PID controllers are known for their simplicity, robustness, and effectiveness in controlling a wide range of systems. They are commonly used in industrial processes, such as temperature control, motor speed regulation, and flow rate control. However, PID controllers may struggle to handle complex systems with nonlinearities or significant disturbances.
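The three terms can be combined in a short discrete-time sketch. This is a textbook form with no anti-windup or derivative filtering, and the plant (a first-order system dy/dt = -y + u), the gains, and the time step are assumptions picked only to make the behavior visible.

```python
class PID:
    """Discrete PID controller (textbook form, no anti-windup or filtering)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement):
        error = setpoint - measurement
        self.integral += error * self.dt              # integral term state
        if self.prev_error is None:
            derivative = 0.0                          # no history on first call
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(kp, ki, kd, setpoint=1.0, dt=0.01, steps=2000):
    """Drive a hypothetical plant dy/dt = -y + u and return the final output."""
    pid = PID(kp, ki, kd, dt)
    y = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, y)
        y += dt * (-y + u)                            # forward-Euler plant step
    return y


p_only = simulate(kp=2.0, ki=0.0, kd=0.0)
full_pid = simulate(kp=2.0, ki=2.0, kd=0.05)
print(f"P only: {p_only:.3f}  PID: {full_pid:.3f}")
```

In this setup the proportional-only controller settles at about two thirds of the setpoint, while adding the integral term removes that steady-state offset.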

Adaptive Control

Adaptive control techniques address the limitations of traditional PID control by adjusting the controller parameters online based on the system’s changing dynamics. This allows the controller to adapt to uncertainties, disturbances, and variations in the system’s behavior. Adaptive control algorithms typically involve:

- System identification: Estimating the system’s dynamic parameters online.

- Controller adaptation: Adjusting the controller parameters based on the identified system dynamics.

Adaptive control offers improved performance in systems with time-varying parameters or unknown disturbances. Applications include robotic control, aerospace systems, and process control. However, adaptive control can be computationally intensive and requires careful tuning to ensure stability and robustness.
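The two ingredients, system identification and controller adaptation, can be illustrated with a small self-tuning sketch. It assumes a hypothetical plant y[k+1] = a*y[k] + b*u[k] with unknown a and b, estimates them with recursive least squares, and recomputes the control law from the current estimates at every step. The plant values, initial guesses, and function names are illustrative assumptions, and the example ignores noise and stability safeguards that a real design would need.

```python
def rls_update(theta, P, phi, y, forgetting=1.0):
    """One recursive-least-squares step for the model y = phi . theta."""
    P_phi = [P[0][0] * phi[0] + P[0][1] * phi[1],
             P[1][0] * phi[0] + P[1][1] * phi[1]]
    denom = forgetting + phi[0] * P_phi[0] + phi[1] * P_phi[1]
    gain = [P_phi[0] / denom, P_phi[1] / denom]
    error = y - (phi[0] * theta[0] + phi[1] * theta[1])   # prediction error
    theta = [theta[0] + gain[0] * error, theta[1] + gain[1] * error]
    P = [[(P[i][j] - gain[i] * P_phi[j]) / forgetting for j in range(2)]
         for i in range(2)]
    return theta, P


def run_adaptive_control(a_true=0.9, b_true=0.5, reference=1.0, steps=50):
    theta = [0.0, 1.0]                       # initial guesses for (a, b)
    P = [[100.0, 0.0], [0.0, 100.0]]         # large P = low confidence
    y = 0.0
    for _ in range(steps):
        a_hat, b_hat = theta
        b_safe = b_hat if abs(b_hat) > 1e-3 else 1e-3    # avoid division by ~0
        u = (reference - a_hat * y) / b_safe             # controller adaptation
        y_next = a_true * y + b_true * u                 # plant (unknown to controller)
        theta, P = rls_update(theta, P, [y, u], y_next)  # system identification
        y = y_next
    return y, theta


final_y, (a_est, b_est) = run_adaptive_control()
print(f"output={final_y:.3f}  a_hat={a_est:.3f}  b_hat={b_est:.3f}")
```

The controller starts with wrong parameter guesses, yet the output approaches the reference once the estimates have converged, which is the behavior adaptive schemes aim for.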

Predictive Control

Predictive control, also known as Model Predictive Control (MPC), uses a model of the system to predict future behavior and optimize the control actions over a specified horizon. MPC algorithms involve:

- System modeling: Developing a mathematical model of the system’s dynamics.

- Prediction: Forecasting future system states based on the model and current measurements.

- Optimization: Determining the optimal control actions that minimize a predefined objective function, such as minimizing tracking error or energy consumption.

MPC offers advantages in handling constraints, optimizing multiple objectives, and dealing with complex systems. It is widely used in industrial processes, such as chemical plants, power systems, and automotive applications. However, MPC requires accurate system models and can be computationally demanding.
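The model, predict, and optimize cycle can be shown with a deliberately simple receding-horizon sketch. It assumes a hypothetical scalar model x[k+1] = a*x[k] + b*u[k] and replaces the numerical optimizer a practical MPC would use with brute-force enumeration over a small set of allowed inputs; the input bound is enforced by that candidate set. All names and values are assumptions for illustration.

```python
from itertools import product


def mpc_step(x, reference, a=1.0, b=0.5, horizon=4,
             candidates=(-1.0, -0.5, 0.0, 0.5, 1.0), input_weight=0.01):
    """Return the first input of the lowest-cost sequence over the horizon."""
    best_cost, best_first = float("inf"), 0.0
    for sequence in product(candidates, repeat=horizon):
        x_pred, cost = x, 0.0
        for u in sequence:
            x_pred = a * x_pred + b * u                  # prediction with the model
            cost += (x_pred - reference) ** 2 + input_weight * u ** 2
        if cost < best_cost:
            best_cost, best_first = cost, sequence[0]
    return best_first


x, applied = 0.0, []
for _ in range(8):
    u = mpc_step(x, reference=2.0)   # optimize over the horizon
    applied.append(u)                # apply only the first input
    x = 1.0 * x + 0.5 * u            # "real" plant, here identical to the model
print(applied, x)
```

Only the first input of each optimized sequence is applied before the optimization is repeated from the new state, which is what distinguishes a receding-horizon controller from a one-off plan. Enumeration scales exponentially with the horizon, which hints at why MPC can be computationally demanding.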

Fuzzy Logic Control

Fuzzy logic control utilizes fuzzy sets and fuzzy logic to represent and manipulate uncertain or imprecise information. It allows for the design of controllers that can handle complex, nonlinear systems with linguistic rules. Fuzzy logic controllers involve:

- Fuzzification: Converting crisp input values into fuzzy sets.

- Rule base: Defining fuzzy rules that relate input and output fuzzy sets.

- Inference engine: Applying the rules to infer fuzzy outputs.

- Defuzzification: Converting fuzzy outputs into crisp control signals.

Fuzzy logic control offers advantages in handling uncertainty, incorporating human expertise, and achieving robust performance. It is applied in diverse fields, including consumer products, industrial processes, and medical devices. However, fuzzy logic controllers require careful design and tuning of the rule base and membership functions.
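The four stages can be traced in a compact sketch of a hypothetical heater controller. The membership functions, the three rules, and the output levels are assumptions made up for this example, and defuzzification uses a weighted average of singleton outputs (a zero-order Sugeno style) rather than the centroid of output fuzzy sets used in Mamdani controllers.

```python
def fuzzify(error):
    """Fuzzification: map a crisp error (setpoint - measured, in degrees) to memberships."""
    return {
        "too_hot": max(0.0, min(1.0, -error / 5.0)),
        "ok": max(0.0, 1.0 - abs(error) / 5.0),
        "too_cold": max(0.0, min(1.0, error / 5.0)),
    }


# Rule base: IF temperature is <label> THEN heater power is <percent>.
RULES = {"too_hot": 0.0, "ok": 50.0, "too_cold": 100.0}


def heater_power(error):
    memberships = fuzzify(error)
    total = sum(memberships.values())
    if total == 0.0:                 # no rule fired; fall back to the neutral output
        return RULES["ok"]
    # Inference and defuzzification: weight each rule output by its firing strength.
    return sum(memberships[name] * RULES[name] for name in RULES) / total


for err in (-7.0, 0.0, 2.5, 10.0):
    print(err, heater_power(err))
```

An error of 2.5 degrees belongs half to "ok" and half to "too_cold", so the output lands halfway between the two rule outputs instead of switching abruptly.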

Design and Implementation of Dynamic Control Systems

Designing and implementing a dynamic control system involves a systematic approach to ensure the system effectively achieves its desired goals. This process involves understanding the system’s dynamics, selecting appropriate control algorithms, and tuning parameters to optimize performance.

Steps Involved in Designing and Implementing a Dynamic Control System

The design and implementation of a dynamic control system typically involve several distinct steps. These steps ensure that the system is developed, tested, and deployed effectively.

- System Modeling: The first step is to develop a mathematical model that accurately represents the behavior of the system. This model is crucial for understanding the system’s dynamics and predicting its response to different inputs. The model can be developed using various techniques, including differential equations, transfer functions, and state-space representations.

- Control Objectives and Specifications: Defining the control objectives and specifications is essential. This step clarifies what the system should achieve and how it should behave. Examples of control objectives include maintaining a specific temperature, regulating the speed of a motor, or stabilizing a robot’s position. Specifications define the performance criteria, such as settling time, overshoot, and steady-state error.

- Control Algorithm Selection: Choosing the appropriate control algorithm is a critical step. There are numerous control algorithms available, each with its strengths and weaknesses. The selection process considers factors such as the system’s dynamics, the control objectives, and the available hardware and software resources. Common control algorithms include proportional-integral-derivative (PID) control, state-feedback control, and adaptive control.

- Parameter Tuning: After selecting the control algorithm, it’s essential to tune its parameters to achieve the desired performance. Parameter tuning involves adjusting the gains and other parameters of the control algorithm to optimize the system’s response. This process often involves experimentation and iterative adjustments to find the optimal parameter values.

- Implementation and Testing: Once the control algorithm and parameters are determined, the system is implemented in hardware or software. This step involves translating the design into a real-world system using appropriate hardware components, sensors, actuators, and software. After implementation, the system is rigorously tested to ensure it meets the defined specifications and performs as expected.

- Deployment and Monitoring: The final step is deploying the system and continuously monitoring its performance. This step involves integrating the system into the intended environment and collecting data to assess its long-term performance. Monitoring allows for adjustments to the control system if necessary to maintain optimal performance over time.
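The performance criteria named in the specification step (settling time, overshoot, and steady-state error) can be computed directly from a recorded or simulated step response. The sketch below is one way to do it, assuming a 2% settling band and using the standard step response of an underdamped second-order system as test data; the function names and the damping and frequency values are illustrative assumptions.

```python
import math


def step_metrics(times, outputs, setpoint, band=0.02):
    """Return (overshoot %, settling time, steady-state error) for a step response."""
    overshoot_pct = max(0.0, (max(outputs) - setpoint) / setpoint * 100.0)
    steady_state_error = abs(setpoint - outputs[-1])
    settling_time = None                       # stays None if never settled
    for t, y in zip(reversed(times), reversed(outputs)):
        if abs(y - setpoint) > band * abs(setpoint):
            break                              # last sample outside the band
        settling_time = t
    return overshoot_pct, settling_time, steady_state_error


def second_order_step(t, zeta=0.5, omega_n=2.0):
    """Unit step response of an underdamped second-order system."""
    omega_d = omega_n * math.sqrt(1.0 - zeta ** 2)
    decay = math.exp(-zeta * omega_n * t)
    return 1.0 - decay * (math.cos(omega_d * t)
                          + zeta / math.sqrt(1.0 - zeta ** 2) * math.sin(omega_d * t))


times = [k * 0.001 for k in range(10001)]      # 0 to 10 s
outputs = [second_order_step(t) for t in times]
overshoot, settling, sse = step_metrics(times, outputs, setpoint=1.0)
print(f"overshoot={overshoot:.1f}%  settling={settling:.2f}s  error={sse:.5f}")
```

Running the same function on test data before and after parameter tuning gives a simple, repeatable check against the specifications.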

Selection of Control Algorithms and Parameters

The selection of control algorithms and parameters is crucial for the successful implementation of a dynamic control system. This process requires a thorough understanding of the system’s dynamics, the control objectives, and the available resources.

- System Dynamics: The dynamics of the system significantly influence the choice of control algorithm. Systems with fast dynamics need high sampling rates and short computation times, which constrains how elaborate the algorithm can be. Strongly nonlinear or tightly coupled dynamics, rather than speed alone, are what usually call for more advanced algorithms. Conversely, systems with slow, well-behaved dynamics can often be controlled effectively using simpler algorithms.

- Control Objectives: The control objectives define the desired behavior of the system. For example, if the objective is to minimize overshoot, a more heavily damped design might be preferred, usually at the cost of a slower response. Similarly, if the objective is to eliminate steady-state error, a control algorithm that incorporates integral action might be necessary.

- Available Resources: The availability of hardware and software resources influences the selection of control algorithms and parameters. Some algorithms require more computational power or specific hardware components, while others can be implemented on simpler platforms. The selection process should consider the limitations of the available resources.

Practical Examples of Dynamic Control Systems

Dynamic control systems are widely used in various industries and applications. Here are a few examples:

- Temperature Control in HVAC Systems: HVAC systems use dynamic control systems to regulate the temperature of buildings. Sensors monitor the temperature, and a control algorithm adjusts the heating or cooling system to maintain the desired temperature. This ensures a comfortable environment while optimizing energy consumption.

- Cruise Control in Automobiles: Cruise control systems use dynamic control systems to maintain a constant vehicle speed. Sensors measure the vehicle’s speed, and a control algorithm adjusts the engine throttle to maintain the set speed. This feature improves driver comfort and fuel efficiency.

- Robotics and Automation: Dynamic control systems are essential for robots and automated systems. They are used to control the movement of robotic arms, navigate autonomous vehicles, and regulate the operation of automated manufacturing processes. These systems ensure precise and reliable operation, enhancing productivity and efficiency.

Applications of Dynamic Control Technologies

Dynamic control technologies are widely applied across various industries, offering solutions to complex challenges and improving efficiency, safety, and performance. These technologies are essential for optimizing systems, ensuring stability, and achieving desired outcomes.

Industrial Automation

Dynamic control plays a crucial role in industrial automation, where precise and responsive control is essential for efficient production processes.

- Robotics: Dynamic control algorithms are used to control the motion of robots, ensuring precise movements, collision avoidance, and optimal performance. For instance, in manufacturing, robots equipped with dynamic control systems can perform complex tasks with high accuracy and repeatability, improving production efficiency and reducing errors.

- Process Control: Dynamic control systems are used to regulate variables like temperature, pressure, and flow rate in industrial processes. For example, in chemical plants, dynamic control systems help maintain optimal operating conditions, ensuring product quality and safety.

- Machine Tool Control: Dynamic control algorithms are used to control the movement of machine tools, enabling precise cutting, drilling, and other operations. This results in improved accuracy, reduced waste, and increased productivity.

Transportation

Dynamic control technologies are essential for improving the efficiency, safety, and comfort of transportation systems.

- Autonomous Vehicles: Dynamic control systems are critical for the autonomous navigation of vehicles, enabling them to perceive their surroundings, make decisions, and execute maneuvers safely and efficiently. Dynamic control algorithms are used for path planning, obstacle avoidance, and adaptive cruise control.

- Aircraft Control: Dynamic control systems are used to stabilize aircraft and maintain flight control during various flight conditions. These systems are crucial for ensuring aircraft safety and performance. For example, the autopilot system in modern aircraft relies on dynamic control algorithms to maintain altitude, heading, and speed.

- Traffic Management: Dynamic control systems are used to optimize traffic flow and minimize congestion in urban areas. These systems can dynamically adjust traffic signals based on real-time traffic conditions, improving efficiency and reducing travel times.

Energy Systems

Dynamic control technologies are used to optimize energy generation, transmission, and consumption.

- Power Grid Control: Dynamic control systems are used to regulate the flow of electricity in power grids, ensuring stability and reliability. These systems can respond to fluctuations in demand and supply, preventing blackouts and maintaining optimal grid performance.

- Renewable Energy Integration: Dynamic control systems are essential for integrating renewable energy sources, such as solar and wind power, into the grid. Because the output of these sources varies with the weather, control systems balance it against demand, for example by curtailing generation or dispatching storage, to maintain grid stability.

- Energy Storage Management: Dynamic control systems are used to manage energy storage systems, such as batteries, to optimize energy utilization and minimize costs. These systems can charge and discharge batteries based on demand and supply, maximizing efficiency and minimizing energy waste.
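Charging and discharging based on supply and demand can be sketched as a simple rule-based dispatcher. The function below is a hypothetical illustration, not a real energy-management algorithm: it charges when supply exceeds demand and discharges otherwise, limited by an assumed power rating and by the energy the battery can still absorb or deliver. All names, units, and values are assumptions.

```python
def dispatch_battery(surplus_kw, soc_kwh, capacity_kwh, max_rate_kw, dt_h=1.0):
    """Return (battery_power_kw, new_soc_kwh); positive power means charging.

    surplus_kw is supply minus demand for the current interval.
    """
    if surplus_kw >= 0:
        headroom_kw = (capacity_kwh - soc_kwh) / dt_h     # room left to charge
        power = min(surplus_kw, max_rate_kw, headroom_kw)
    else:
        available_kw = soc_kwh / dt_h                     # energy left to discharge
        power = -min(-surplus_kw, max_rate_kw, available_kw)
    return power, soc_kwh + power * dt_h


soc = 5.0
for surplus in [3.0, 3.0, 3.0, -4.0, -4.0]:               # hourly surplus in kW
    power, soc = dispatch_battery(surplus, soc, capacity_kwh=10.0, max_rate_kw=2.0)
    print(f"surplus={surplus:+.1f} kW  battery={power:+.1f} kW  soc={soc:.1f} kWh")
```

A production system would typically add forecasts, prices, and battery wear to the decision, which is where optimization-based methods such as MPC come in.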

Healthcare

Dynamic control technologies are finding increasing applications in healthcare, improving patient care and treatment outcomes.

- Medical Devices: Dynamic control systems are used in medical devices such as pacemakers, insulin pumps, and prosthetic limbs. These systems ensure precise and responsive control, improving patient health and well-being.

- Surgical Robotics: Dynamic control algorithms are used to control the movement of surgical robots, enabling minimally invasive procedures with high accuracy and precision. This reduces the risk of complications and improves patient recovery times.

- Drug Delivery: Dynamic control systems are used to optimize drug delivery, ensuring that patients receive the correct dosage at the right time. This improves treatment effectiveness and reduces side effects.

Future Trends in Dynamic Control Technologies

The field of dynamic control technologies is constantly evolving, driven by advancements in computing power and data analytics. Emerging trends such as artificial intelligence, machine learning, and cloud computing are poised to change the way we design, implement, and operate dynamic control systems. These technologies offer opportunities to enhance system performance, optimize resource utilization, and address complex challenges.

Artificial Intelligence and Machine Learning in Dynamic Control

Artificial intelligence (AI) and machine learning (ML) are transforming dynamic control systems by enabling them to learn from data, adapt to changing conditions, and make intelligent decisions. AI and ML algorithms can analyze vast amounts of data from sensors, actuators, and other system components to identify patterns, predict future behavior, and optimize control strategies in real time.

- Adaptive Control: AI and ML can create adaptive control systems that adjust their parameters based on real-time data and environmental changes. This allows for improved system performance and robustness in dynamic and unpredictable environments.

- Predictive Maintenance: AI and ML algorithms can analyze sensor data to predict potential failures in system components. This enables proactive maintenance, reducing downtime and extending the lifespan of critical assets.

- Autonomous Control: AI and ML can empower dynamic control systems to operate autonomously, making decisions without human intervention. This is particularly relevant in applications like autonomous vehicles, robotics, and industrial automation.

Cloud Computing for Dynamic Control Systems

Cloud computing offers a powerful platform for developing, deploying, and managing dynamic control systems. The scalability, flexibility, and cost-effectiveness of cloud computing resources enable the implementation of sophisticated control algorithms and the processing of large datasets.

- Remote Monitoring and Control: Cloud computing allows for remote monitoring and control of dynamic systems, regardless of geographical location. This enables centralized management and real-time access to system data.

- Data Storage and Analytics: Cloud-based platforms provide secure and scalable data storage solutions for dynamic control systems. This enables advanced data analytics and the development of AI and ML models.

- Simulation and Optimization: Cloud computing resources can be used for virtual simulation and optimization of dynamic control systems. This allows for testing and refining control strategies before deployment in real-world environments.

Applications of Future Trends in Dynamic Control Technologies

The integration of AI, ML, and cloud computing is expected to revolutionize dynamic control applications across various sectors:

- Manufacturing: AI-powered control systems can optimize production processes, reduce waste, and improve efficiency. Cloud computing enables remote monitoring and control of manufacturing facilities, enhancing operational flexibility and responsiveness.

- Energy: AI and ML algorithms can optimize energy consumption in buildings, factories, and power grids. Cloud-based platforms can facilitate the integration of renewable energy sources and improve grid stability.

- Transportation: Autonomous vehicles rely heavily on AI and ML for navigation, decision-making, and safety. Cloud computing provides a platform for data sharing and traffic management in smart cities.

- Healthcare: AI-powered control systems can optimize medical devices, improve patient monitoring, and personalize treatment plans. Cloud computing enables remote patient care and facilitates data sharing between healthcare providers.

Wrap-Up

The field of dynamic control technologies is constantly evolving, with advancements in artificial intelligence, machine learning, and cloud computing driving innovation. These emerging trends are paving the way for more intelligent and adaptable control systems, promising to further enhance efficiency, safety, and performance across industries. As we continue to explore the potential of dynamic control, we can anticipate even more transformative applications that will shape our future.
